Here's a question making the rounds in SEO circles: Should you be serving markdown-formatted pages specifically to LLM crawlers like ChatGPT and Perplexity?

Google's answer is clear, and it might save you a lot of unnecessary work.

Google Says: Don't Bother With LLM-Only Markdown Pages

John Mueller, Google Search Advocate, recently addressed the trend of publishers creating separate markdown or JSON pages exclusively for large language models. His take? It's unnecessary and potentially risky.

Mueller put it bluntly: "LLMs have trained on – read & parsed – normal web pages since the beginning, it seems a given that they have no problems dealing with HTML. Why would they want to see a page that no user sees?"

The logic is straightforward. LLMs have successfully parsed billions of HTML pages since they started crawling the web. ChatGPT, Perplexity, and Google's AI Overviews have all been digesting standard HTML without issue. Creating "shadow" content that exists only for bots introduces complexity, maintenance overhead, and potential inconsistencies between what users see and what AI systems consume.

Why LLM-Only Content Is a Bad Bet

Creating separate markdown versions of your content introduces several problems:

Duplicate maintenance burden. Every time you update a page, you'll need to sync changes across multiple versions. Miss one update, and you're serving inconsistent information to different audiences.

Cloaking concerns. Serving different content to bots than to users has historically been a red flag in Google's webmaster guidelines. While the context here is different, you're still creating content that real visitors never see.

No guaranteed advantage. Mueller noted that performance differences between pages likely don't stem from file format choices. If your content isn't performing well with AI systems, the problem is probably structural or semantic, not whether you're using HTML tags or markdown syntax.

Format assumptions don't scale. Different AI platforms have different parsing capabilities. What works for one LLM might not work for another. Building around assumptions about format preferences means you're constantly chasing moving targets.

What Actually Moves the Needle for AI Visibility

If markdown pages aren't the answer, what is? Mueller's guidance points back to fundamentals: the same principles that have always made content accessible, regardless of who (or what) is consuming it.

Improve your existing HTML pages. Focus on speed, readability, and logical content structure. If a human can easily scan and understand your page, an AI system will have no trouble either. This means clear headings, concise paragraphs, and information organized in a logical hierarchy.

Clean up your information architecture. AI systems, like users, navigate through internal links and site structure. If your pages are buried six clicks deep or connected through convoluted paths, they're less likely to be surfaced or cited. Strong internal linking isn't just an SEO play; it's fundamental to AI discoverability.
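To make "clicks deep" concrete, here is a minimal sketch that computes click depth with a breadth-first search over an internal link graph. The `site` dictionary and its URLs are hypothetical; in practice you would build the graph from a crawl export. The three-click threshold is an arbitrary cutoff chosen for illustration, not a figure from Mueller.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str) -> dict[str, int]:
    """Return the minimum number of clicks from the homepage to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical site graph: each key links to the pages in its list.
site = {
    "/": ["/services", "/blog"],
    "/services": ["/services/audits"],
    "/blog": ["/blog/post-1"],
    "/blog/post-1": ["/blog/post-2"],
    "/blog/post-2": [],
    "/services/audits": [],
}

depths = click_depths(site, "/")
# Pages three or more clicks from the homepage (illustrative threshold).
deep_pages = sorted(page for page, depth in depths.items() if depth >= 3)
print(deep_pages)
```

Pages that never show up in `depths` at all are unreachable from the homepage through internal links, which is worth flagging separately.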

Use structured data strategically. Here's where format does matter, but not in the way most people think. Mueller specifically mentioned that when a platform has clearly defined requirements (like OpenAI's eCommerce product feeds using specific JSON schemas), you should absolutely provide that structured data. But that's different from creating alternate versions of every page on your site. Implement schema.org markup, use proper JSON-LD for relevant page types, and ensure your structured data accurately reflects your content.
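As a rough illustration, JSON-LD for an article page might be generated like this. The function name and the choice of fields are assumptions made for the example; which schema.org properties you actually need depends on the page type and on the platform consuming the data.

```python
import json


def article_json_ld(headline: str, author: str, date_published: str, url: str) -> str:
    """Build a schema.org Article JSON-LD block wrapped in a script tag.

    The field selection here is illustrative, not a complete or required set.
    """
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,
        "mainEntityOfPage": url,
    }
    payload = json.dumps(data, indent=2)
    # Escape "</" so no value can close the script element early.
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


# Hypothetical values for demonstration only.
snippet = article_json_ld(
    headline="Guide to Semantic HTML",
    author="Jane Doe",
    date_published="2025-01-15",
    url="https://example.com/guide-to-semantic-html",
)
print(snippet)
```

The point of generating the block from the same fields that render the page is that the markup cannot drift away from the visible content.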

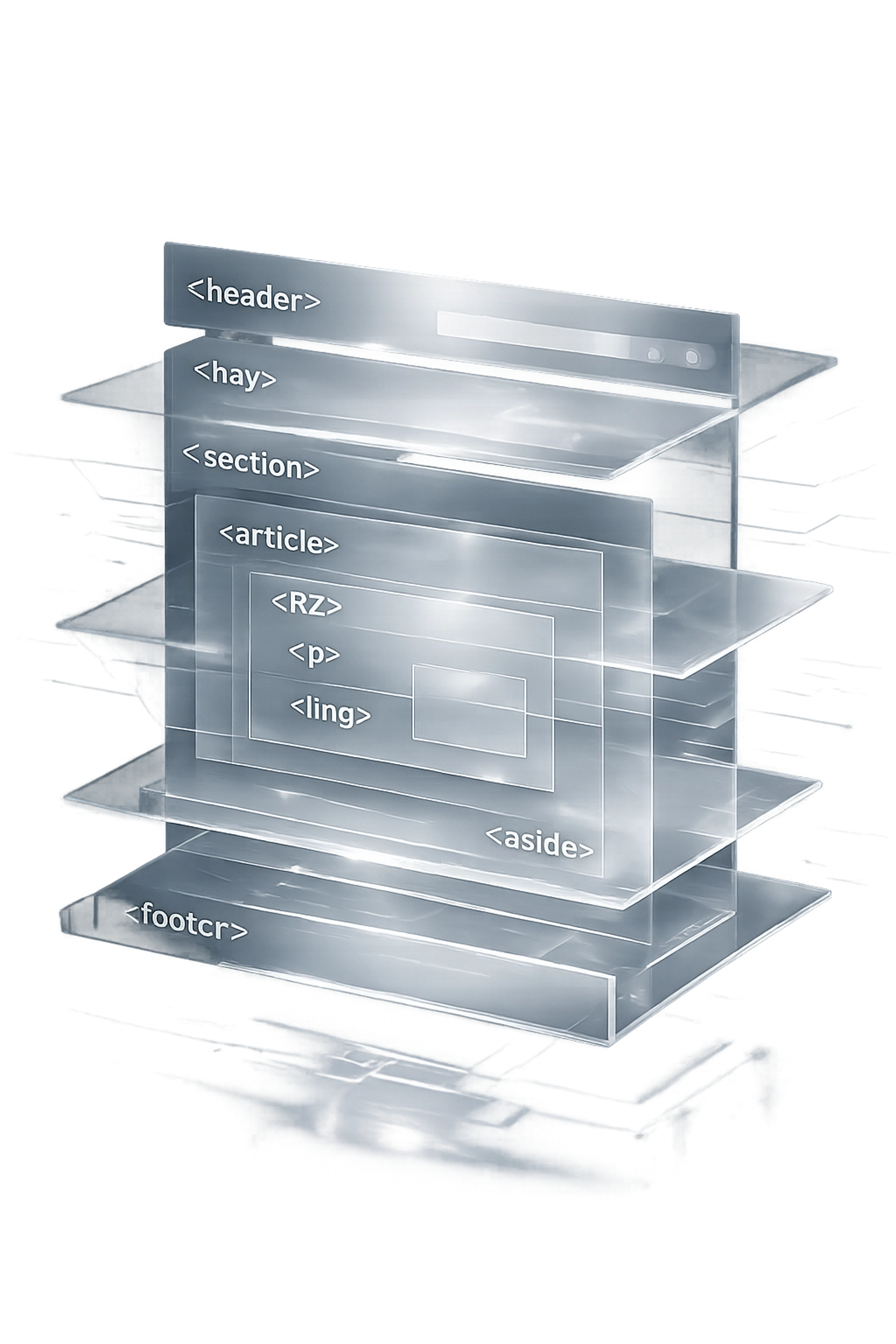

Format for both humans and machines. Use semantic HTML properly. That means actual <h1>, <h2>, and <h3> tags for headings, not just styled <div> elements. Use lists where appropriate. Mark up navigation clearly. These aren't new recommendations: they're web standards that make content universally accessible.
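A quick way to spot styled `<div>` headings is to check whether a page has any real heading tags and whether the levels skip. The sketch below uses Python's standard `html.parser`; the two rules it enforces (at least one `<h1>`, no jumps of more than one level) are common conventions assumed for this example, not requirements quoted from Google.

```python
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Collects the levels of h1-h6 tags in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.levels: list[int] = []

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def heading_issues(html: str) -> list[str]:
    """Return human-readable problems with the heading outline of a page."""
    collector = HeadingCollector()
    collector.feed(html)
    levels = collector.levels
    issues = []
    if 1 not in levels:
        issues.append("no <h1> found")
    for prev, cur in zip(levels, levels[1:]):
        if cur > prev + 1:  # e.g. an <h2> followed directly by an <h4>
            issues.append(f"heading jumps from <h{prev}> to <h{cur}>")
    return issues


# A page that fakes its title with a styled div and skips a level.
sample = '<div class="title">Pricing</div><h2>Plans</h2><h4>Details</h4>'
print(heading_issues(sample))
```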

Address JavaScript cautiously. Mueller noted that JavaScript remains a challenge for many AI systems. If your critical content lives exclusively in client-side rendered JavaScript, you're making it harder for both search engines and AI platforms to access it. Progressive enhancement, where your core content is available in HTML and JavaScript adds functionality, remains the safest approach.
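One simple test is to look at the HTML your server returns before any JavaScript runs and confirm that your key phrases are already in it. This sketch assumes you have the raw response body as a string (for example, saved from a plain HTTP fetch); `missing_from_raw_html` and the sample pages are hypothetical names used for illustration.

```python
from html.parser import HTMLParser


class VisibleText(HTMLParser):
    """Gathers text nodes, skipping the contents of <script> and <style>."""

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def missing_from_raw_html(html: str, key_phrases: list[str]) -> list[str]:
    """Return the key phrases that do not appear in the unrendered HTML text."""
    parser = VisibleText()
    parser.feed(html)
    text = " ".join(parser.chunks).lower()
    return [phrase for phrase in key_phrases if phrase.lower() not in text]


server_rendered = "<body><h1>Pricing</h1><p>Plans start at $10 per month.</p></body>"
client_rendered = (
    '<body><div id="root"></div>'
    '<script>render("Plans start at $10 per month.")</script></body>'
)

print(missing_from_raw_html(server_rendered, ["plans start at $10"]))
print(missing_from_raw_html(client_rendered, ["plans start at $10"]))
```

Any phrase reported as missing exists only after client-side rendering, so a crawler that does not execute JavaScript would never see it.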

How We Actually Track AI Visibility

The conversation about LLM-optimized content often skips a crucial question: How do you even know if what you're doing is working?

This is where measurement becomes essential. At Expert SEO Consulting, we track AI visibility through Citemetrix, which monitors how and where your content appears across AI-powered search platforms. We're not guessing whether ChatGPT or Perplexity cites your pages; we're tracking it.

Our AI SEO Audits identify the structural and semantic issues that actually prevent AI systems from understanding and citing your content. We're looking at:

- Content structure and hierarchy – Can an AI system quickly identify your main points and supporting evidence?

- Entity clarity – Are you clearly establishing what your page is about using proper semantic markup and entity references?

- Citation-worthiness – Does your content include the kind of specific, factual information that AI systems are trained to surface?

- Technical accessibility – Are there crawling, rendering, or parsing issues preventing AI systems from accessing your content?

The Real Problem Isn't Format: It's Fundamentals

Full disclosure: Most sites struggling with AI visibility aren't losing out because they're using HTML instead of markdown. They're losing because their content lacks clear structure, their information architecture is a mess, or they're not establishing topical authority through internal linking and entity relationships.

Creating a markdown version of a poorly structured page doesn't fix the underlying problem; it just duplicates it.

The businesses winning in AI-powered search are the ones who've been doing solid SEO work all along: clear content hierarchies, strong internal linking, proper structured data implementation, and pages optimized for both speed and comprehension.

What You Should Do Right Now

Instead of building parallel markdown pages, audit what you already have:

Run a content structure audit. Can a reader (or an AI) quickly scan your page and understand your main points? Are your headings descriptive? Is your most important content in the first half of the page?

Check your internal linking. Are your cornerstone content pieces clearly connected? Do you have orphan pages that exist in isolation?
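Orphan pages are easy to find once you have two inputs: the full list of pages you expect to exist and the internal links between them. The sketch below assumes the page list comes from something like a sitemap and the link map from a crawl; both data structures are hypothetical stand-ins.

```python
def orphan_pages(all_pages: set[str], links: dict[str, list[str]], home: str = "/") -> list[str]:
    """Return pages that no other page links to, excluding the homepage."""
    linked_to = {
        target
        for source, targets in links.items()
        for target in targets
        if target != source  # a page linking to itself does not count
    }
    return sorted(all_pages - linked_to - {home})


# Hypothetical inputs: known pages (e.g. from a sitemap) and crawled links.
pages = {"/", "/guides", "/guides/schema", "/old-landing-page"}
internal_links = {
    "/": ["/guides"],
    "/guides": ["/guides/schema", "/guides"],
    "/old-landing-page": ["/"],
}

print(orphan_pages(pages, internal_links))
```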

Verify your structured data implementation. Is it present? Is it accurate? Does it reflect your actual content, or is it wishful thinking?
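Those three questions can be partly automated. As one narrow example, the sketch below checks that a page has a JSON-LD block, that the block parses, and that its `headline` matches the visible `<h1>`. Treating the `<h1>` as the reference for the headline is an assumption made for this example; a real audit would compare more fields and use a dedicated validator.

```python
import json
from html.parser import HTMLParser


class JsonLdAndH1(HTMLParser):
    """Captures the raw text of JSON-LD script blocks and of <h1> elements."""

    def __init__(self) -> None:
        super().__init__()
        self._target = None  # "jsonld", "h1", or None
        self.jsonld_raw: list[str] = []
        self.h1_text: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._target = "jsonld"
            self.jsonld_raw.append("")
        elif tag == "h1":
            self._target = "h1"
            self.h1_text.append("")

    def handle_endtag(self, tag):
        if tag in ("script", "h1"):
            self._target = None

    def handle_data(self, data):
        if self._target == "jsonld":
            self.jsonld_raw[-1] += data
        elif self._target == "h1":
            self.h1_text[-1] += data


def structured_data_problems(html: str) -> list[str]:
    """Report missing, unparseable, or mismatched JSON-LD on a page."""
    parser = JsonLdAndH1()
    parser.feed(html)
    if not parser.jsonld_raw:
        return ["no JSON-LD block found"]
    h1 = " ".join(parser.h1_text[0].split()) if parser.h1_text else ""
    problems = []
    for raw in parser.jsonld_raw:
        try:
            data = json.loads(raw)
        except json.JSONDecodeError:
            problems.append("JSON-LD block is not valid JSON")
            continue
        headline = data.get("headline") if isinstance(data, dict) else None
        if isinstance(headline, str) and headline.strip() != h1:
            problems.append(f"headline {headline!r} does not match <h1> {h1!r}")
    return problems


page = (
    "<h1>Guide to Semantic HTML</h1>"
    '<script type="application/ld+json">'
    '{"@type": "Article", "headline": "The Best Guide Ever Written"}'
    "</script>"
)
print(structured_data_problems(page))
```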

Test your page speed and rendering. If your pages take five seconds to load or rely heavily on JavaScript for core content, you're creating barriers for both users and AI systems.

Establish measurement. You can't improve what you don't measure. Start tracking where your content appears in AI-powered search results, and use tools like Citemetrix to monitor citations and visibility across those platforms.

This isn't about building something new; it's about fixing what's already there. The good news? The fundamentals that make your content accessible to AI systems are the same fundamentals that have always made content perform well in search.

AI Visibility Doesn't Require Shadow Pages

Google's stance on markdown for LLMs should be a relief: You don't need to build and maintain separate versions of your content for AI consumption. What you need is well-structured, semantically clear HTML that both humans and AI systems can easily parse.

The path to AI visibility runs through the same territory that's always mattered in search: clean code, logical structure, proper markup, and content organized for comprehension. If you've been doing SEO right, you're already most of the way there.

Need help identifying what's actually blocking your AI visibility? Our AI SEO Audits examine your content through the lens of AI discoverability, and we track your performance across AI platforms with Citemetrix. We're not guessing; we're measuring.

Book a consultation to see where your content stands in the age of AI-powered search.