Mike King doesn’t do subtle. The two-time Search Marketer of the Year (no one else has won it twice, as he reminded us) opened his Tech SEO Connect presentation with an “I told you so”—and then spent the next 40 minutes backing it up with data, tools, and a roadmap for where SEO needs to go.

His core argument: query fanout is the mechanism that determines visibility in AI search, and our industry’s major tools haven’t caught up. We got the leaked Google documents. We got the leaked data. We have more information about how search works than ever before. And yet, King pointed out, our software is still talking about text-to-code ratio.

“What are we doing here?” he asked. Good question.

The Prediction That Came True

Back in 2023, before SGE became AI Overviews, King wrote an article for Search Engine Land explaining how the system worked and why retrieval-augmented generation (RAG) was the future. He built his own RAG pipeline using a SERP API, LlamaIndex, and the ChatGPT API to replicate what Google was doing.

He projected that AI Overviews would cut click-through rates by 20-60%, a loss we’re now seeing across the industry. He even made a specific projection for NerdWallet: a 30.81% traffic loss. A couple of months ago, NerdWallet’s loss hit 37.3%.

King admitted he got two things wrong in that original analysis. He thought you could rank anywhere in the SERP and appear in AI Overviews. And he thought large context windows meant comprehensive pages would stay in subsequent conversation turns. What he didn’t know about at the time was query fanout.

What Is Query Fanout?

Query fanout is the process by which AI systems take a user’s prompt and extrapolate it into multiple synthetic queries that run in the background. Those queries retrieve different content chunks, which are then fed to the language model for synthesis.
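To make that flow concrete, here is a toy sketch of expand, retrieve, and pool. Everything in it is an illustrative assumption, not how any AI platform is built: the hypothetical `fan_out` uses fixed templates where a real system has an LLM write the synthetic queries, and `retrieve` ranks passages by simple word overlap instead of production lexical/semantic retrieval.

```python
import re

def tokenize(text: str) -> set[str]:
    """Lowercase word set; a crude stand-in for real query/passage analysis."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def fan_out(prompt: str) -> list[str]:
    """Expand a prompt into synthetic subqueries (hypothetical fixed templates)."""
    templates = ["{p}", "best {p}", "{p} pros and cons", "{p} cost", "{p} vs alternatives"]
    return [t.format(p=prompt) for t in templates]

def retrieve(query: str, corpus: dict[str, str], k: int = 2) -> list[str]:
    """Return up to k passage IDs that share at least one word with the query."""
    q = tokenize(query)
    scored = [(len(q & tokenize(text)), pid) for pid, text in corpus.items()]
    scored = [(overlap, pid) for overlap, pid in scored if overlap > 0]
    scored.sort(key=lambda s: (-s[0], s[1]))  # most overlap first, ties broken by ID
    return [pid for _, pid in scored[:k]]

def pooled_passages(prompt: str, corpus: dict[str, str], k: int = 2) -> dict[str, list[str]]:
    """Map each subquery to its retrieved passages; their union is what the model sees."""
    return {q: retrieve(q, corpus, k) for q in fan_out(prompt)}

corpus = {
    "a": "lake house cost and maintenance",
    "b": "lake house pros and cons",
    "c": "mountain cabin rental",
}
pooled = pooled_passages("lake house", corpus)
```

Passage `c` is never retrieved for any subquery, so it never reaches the synthesis step, however good it might be.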

King’s analogy: AI search is like a raffle. We don’t control the synthesis pipeline—all we control is the inputs. The more of these synthetic subqueries you rank for, the more raffle tickets you have.

The systems compare all the retrieved passages against each other to decide which ones to use. If you’re not being retrieved for any of those fanout queries, you’re not in the raffle at all.

The Data That Should Scare You

King shared several studies that quantify the disconnect between traditional SEO success and AI visibility:

A Ziptie study from May found that ranking in Google’s top 10 gives you only a one-in-four chance of appearing in Perplexity or ChatGPT. “I can’t run a business on a one in four chance,” King said.

Data from Profound showed just 19% overlap between ranking in Google and appearing in ChatGPT. And 62% of ChatGPT citations come from outside Google search entirely—from training data or direct pipelines.

The good news from that Profound data: there’s no position bias in ChatGPT. You don’t have to be position one or two. If you’re being retrieved, your content is considered equally. And if a URL ranks for multiple fanout queries, or if your domain ranks for many of them, you have a higher likelihood of appearing in the final response.
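King’s raffle framing reduces to a count: how many fanout queries retrieved each URL, and each domain. A minimal sketch, assuming you already have a mapping from each subquery to the URLs retrieved for it; retrieval order is ignored, in line with the no-position-bias finding above.

```python
from collections import Counter
from urllib.parse import urlparse

def raffle_tickets(fanout_results: dict[str, list[str]]) -> tuple[Counter, Counter]:
    """Count the fanout queries that retrieved each URL and each domain.

    A URL or domain earns at most one ticket per subquery, however many times
    it appears in that subquery's results.
    """
    by_url, by_domain = Counter(), Counter()
    for urls in fanout_results.values():
        unique_urls = set(urls)
        by_url.update(unique_urls)
        by_domain.update({urlparse(u).netloc for u in unique_urls})
    return by_url, by_domain
```

Example: with `{"q1": ["https://a.com/x", "https://b.com/y"], "q2": ["https://a.com/z"], "q3": ["https://a.com/x"]}`, `a.com` holds three tickets at the domain level even though no single URL was retrieved for all three queries.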

For Gemini specifically, data from the Sierra Interactive team shows an average of 10.7 fanout queries per prompt, with as many as 28. The queries average 6.7 words but run as long as 13.

Here’s the kicker: 95% of the queries in query fanout have no monthly search volume in traditional keyword tools. The platforms aren’t showing you these queries because they’re synthetically generated. This is why “just use People Also Ask” isn’t sufficient—these queries don’t exist in conventional keyword research.

How Query Fanout Actually Works

King walked through the technical process. It starts with intent classification, where the system determines the information domain, the subdomain (taxonomy), and the user task, and runs safety checks. Then comes slot identification: filling in the implicit and explicit variables that describe what the user is looking for.

The system does latent intent projection, plotting queries in multidimensional space to find related ones. It rewrites and diversifies queries for broader retrieval. It speculates on questions the user might be asking. And critically, it routes queries to expected content formats—index results, APIs, images, videos, and so on.

“This is one of the key things you need to understand,” King emphasized. “It can’t just be random content on your site. They have expectations for formats.” If your content doesn’t match the expected format for a query, you’re unlikely to win it—unless nothing else fits either.
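Format routing can be pictured as a function from query to expected format. The sketch below is deliberately naive and entirely hypothetical: the cue words and format labels are our own, and real routers are learned classifiers, not keyword lists. It shows the practical check that matters, though: which of your target queries expect a format your page doesn’t provide.

```python
import re

# Hypothetical cue words per format, checked in order; first match wins.
FORMAT_CUES = [
    ("comparison", re.compile(r"\b(vs|versus|compare|comparison|best)\b")),
    ("video", re.compile(r"\b(how to|tutorial|step by step)\b")),
    ("local/maps", re.compile(r"\b(near me|nearby|open now)\b")),
    ("product/shopping", re.compile(r"\b(price|cost|buy|cheap)\b")),
]

def expected_format(query: str) -> str:
    """Guess the content format a query would be routed to (default: article)."""
    q = query.lower()
    for fmt, pattern in FORMAT_CUES:
        if pattern.search(q):
            return fmt
    return "article"

def format_mismatches(queries: list[str], page_format: str) -> list[str]:
    """Queries whose expected format differs from the format your page offers."""
    return [q for q in queries if expected_format(q) != page_format]
```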

The system also makes cost and budgeting decisions on the fly. API calls are expensive at scale, so it might run cheaper lexical (BM25) queries instead of semantic ones when it can.
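Lexical scoring is cheap because it needs only term counts, with no embedding call. Below is a compact Okapi BM25 scorer for reference; the parameters `k1=1.5` and `b=0.75` are common defaults we chose, not values any AI platform has disclosed, and the IDF uses the Lucene-style form that never goes negative.

```python
import math
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())

def bm25_scores(query: str, docs: list[str], k1: float = 1.5, b: float = 0.75) -> list[float]:
    """Okapi BM25 score of each document for the query."""
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(tokenized)
    avgdl = sum(len(t) for t in tokenized) / n_docs or 1.0  # guard against all-empty docs
    df = Counter(term for toks in tokenized for term in set(toks))  # document frequency
    scores = []
    for toks in tokenized:
        tf = Counter(toks)
        score = 0.0
        for term in tokenize(query):
            if term not in tf:
                continue
            idf = math.log((n_docs - df[term] + 0.5) / (df[term] + 0.5) + 1)
            # Term-frequency saturation (k1) plus length normalization (b).
            norm = tf[term] + k1 * (1 - b + b * len(toks) / avgdl)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores
```

A document containing none of the query terms scores exactly zero, which is why lexical retrieval misses pages that are relevant but worded differently.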

Where to Find Query Fanout Data

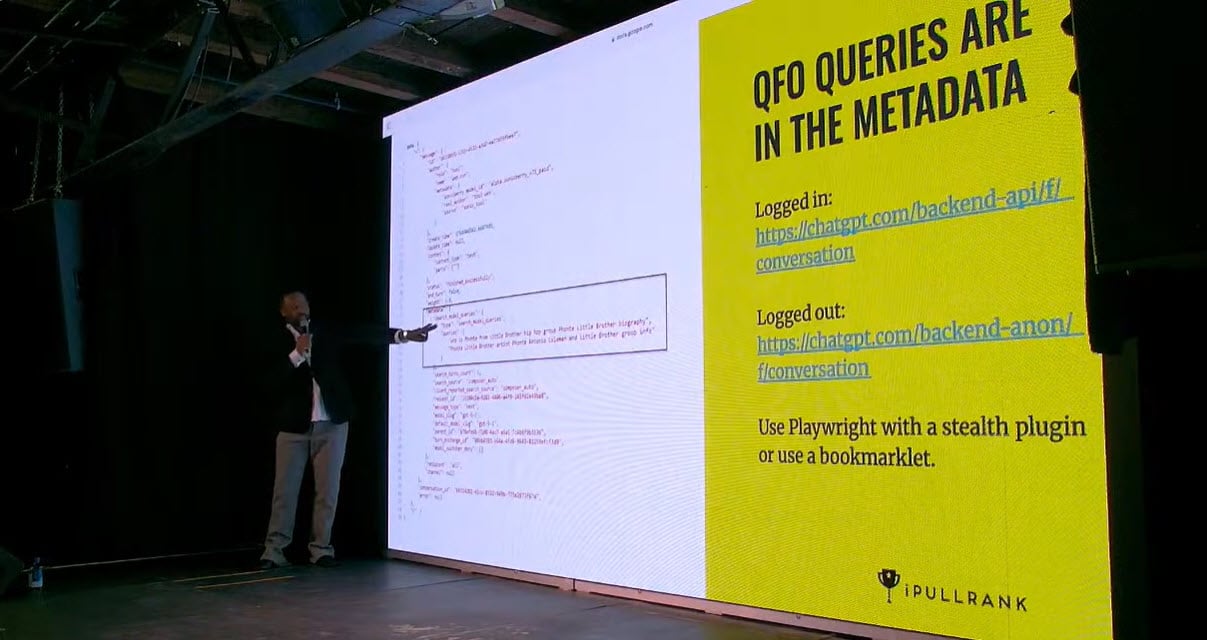

King showed exactly how to extract this data yourself. For ChatGPT, go to the network tab in your browser’s developer tools, look for the “conversations” endpoint, and find the “search_model_queries” in the metadata. That’s where the fanout queries live. The same endpoint shows you the search results that were used, including titles, URLs, domains, and snippets.
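If you save that response body from DevTools, a short script can pull the queries out. This is a sketch under stated assumptions. We don’t know the exact (and changing) shape of ChatGPT’s `conversations` payload, so the code searches for the `search_model_queries` key at any depth, accepts either a list of strings or an object holding a `queries` list, and handles both plain JSON and server-sent-event lines of the form `data: {...}`. Check all three guesses against what your browser actually shows.

```python
import json
from typing import Any, Iterator

def load_events(raw: str) -> list[Any]:
    """Parse a saved body: one JSON document, or SSE lines like 'data: {...}'."""
    try:
        return [json.loads(raw)]
    except json.JSONDecodeError:
        pass
    events = []
    for line in raw.splitlines():
        if line.startswith("data: "):
            try:
                events.append(json.loads(line[len("data: "):]))
            except json.JSONDecodeError:
                continue  # skips non-JSON markers such as "data: [DONE]"
    return events

def find_key(obj: Any, key: str) -> Iterator[Any]:
    """Yield every value stored under `key`, at any nesting depth."""
    if isinstance(obj, dict):
        for k, v in obj.items():
            if k == key:
                yield v
            yield from find_key(v, key)
    elif isinstance(obj, list):
        for item in obj:
            yield from find_key(item, key)

def fanout_queries(events: list[Any]) -> list[str]:
    """Collect unique fanout queries, in first-seen order."""
    seen: list[str] = []
    for value in find_key(events, "search_model_queries"):
        if isinstance(value, dict):  # assumed shape: {"queries": [...]}
            value = value.get("queries", [])
        for q in value if isinstance(value, list) else []:
            if isinstance(q, str) and q not in seen:
                seen.append(q)
    return seen
```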

For Gemini, you can call the API with grounding through the Google Search tool enabled. King’s Gemini Grounding Detector tool shows whether a query triggered grounding, what queries were generated, and what sources were used.
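The same three signals can be read from a raw API response. This sketch assumes the JSON returned by the Gemini API’s REST `generateContent` method when the request enables the Google Search tool (`"tools": [{"google_search": {}}]`). The field names (`groundingMetadata`, `webSearchQueries`, `groundingChunks[].web`) follow Google’s grounding documentation as we understand it; verify them against the current reference. It parses a response you already have and makes no network calls. Note that the `uri` values may be Google redirect links rather than publisher URLs.

```python
from typing import Any

def summarize_grounding(response: dict[str, Any]) -> dict[str, Any]:
    """Report whether the first candidate was grounded, plus its queries and sources."""
    candidates = response.get("candidates") or [{}]
    meta = candidates[0].get("groundingMetadata")
    if not meta:
        return {"grounded": False, "queries": [], "sources": []}
    sources = [
        (chunk["web"].get("title", ""), chunk["web"].get("uri", ""))
        for chunk in meta.get("groundingChunks", [])
        if "web" in chunk
    ]
    return {
        "grounded": True,
        "queries": meta.get("webSearchQueries", []),
        "sources": sources,
    }
```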

If you don’t trust synthetically generated queries, King offered an alternative he calls “reverse intersect”: get all the citations in an AI Overview, look up all the keywords those URLs rank for, and find where they intersect. Those are likely the queries that were used.
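Reverse intersect is set arithmetic once you have each cited URL’s ranking keywords, for example from a rank-tracker export. A minimal sketch: a strict intersection is `min_urls=len(keywords_by_url)`, and the default of “shared by at least two cited URLs” is our own relaxation, not part of King’s description.

```python
from collections import Counter

def reverse_intersect(keywords_by_url: dict[str, set[str]], min_urls: int = 2) -> list[tuple[str, int]]:
    """Keywords that at least `min_urls` cited URLs rank for, most-shared first."""
    counts = Counter(kw for kws in keywords_by_url.values() for kw in set(kws))
    shared = [(kw, n) for kw, n in counts.items() if n >= min_urls]
    return sorted(shared, key=lambda item: (-item[1], item[0]))
```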

The Tools King Built (Because No One Else Did)

Frustrated by the lack of industry tools, King built his own and open-sourced them:

Qforia generates query fanout modeled on Google’s approach, based on patents King studied. It produces queries across six categories: related, implicit, comparative, personalized, reformulation, and entity-expanded. It also includes routing information, meaning the content format expected for each query. The tool has 48 GitHub forks so far.
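If you generate fanout queries with an LLM yourself, it helps to validate the output against those six categories before using it. The record shape below (`query`, `category`, `expected_format`) is a hypothetical schema of our own, not Qforia’s actual output format.

```python
import json
from dataclasses import dataclass

CATEGORIES = {"related", "implicit", "comparative", "personalized", "reformulation", "entity-expanded"}

@dataclass(frozen=True)
class FanoutQuery:
    query: str
    category: str
    expected_format: str  # the routing hint, e.g. "comparison table"

def parse_fanout(raw: str) -> list[FanoutQuery]:
    """Parse LLM JSON output; reject any category outside the six listed above."""
    out = []
    for item in json.loads(raw):
        category = item["category"].strip().lower()
        if category not in CATEGORIES:
            raise ValueError(f"unknown category: {item['category']!r}")
        out.append(FanoutQuery(item["query"].strip(), category, item.get("expected_format", "unknown")))
    return out

def by_category(queries: list[FanoutQuery]) -> dict[str, list[str]]:
    """Group query strings by category (every category present, possibly empty)."""
    groups: dict[str, list[str]] = {c: [] for c in sorted(CATEGORIES)}
    for q in queries:
        groups[q.category].append(q.query)
    return groups
```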

Chunk Daily chunks your content and shows how different chunks score against your queries. King noted it’s strange that there’s no public index of embeddings across the web—we have link indexes, but nothing for the semantic layer that’s now central to search.
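The core of chunk scoring is “best-matching chunk per query.” In this sketch, term-frequency vectors stand in for real embeddings so it runs without any model. That substitution is ours; swap in embeddings from your model of choice for meaningful semantic scores.

```python
import math
import re
from collections import Counter

def vectorize(text: str) -> Counter:
    """Bag-of-words counts; a placeholder for a real embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def score_chunks(chunks: list[str], queries: list[str]) -> dict[str, tuple[int, float]]:
    """For each query, return (index of best chunk, its similarity). Needs >= 1 chunk."""
    chunk_vecs = [vectorize(c) for c in chunks]
    results = {}
    for q in queries:
        qv = vectorize(q)
        sims = [cosine(qv, cv) for cv in chunk_vecs]
        best = max(range(len(sims)), key=sims.__getitem__)
        results[q] = (best, sims[best])
    return results
```

A query whose best similarity is low across every chunk is a content gap: no passage on the page is a strong candidate for that fanout query.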

Relevance Doctor shows your scoring across different content chunks, helping with passage optimization.

King also pointed to third-party tools with query fanout capabilities: Demand Sphere was first to market for ChatGPT data, PromptWatch includes queries in their response details, and Profound has the most robust version with metrics on query variations over time and diffs between runs. Market Brew has a Content Booster tool that generates content for gaps based on fanout queries.

Passage Optimization and Semantic Triples

King’s tactical advice focused on how to actually win in the retrieval process. Headings matter more than ever—insights from Google’s Discovery Engine API show that the hierarchy of headings (H1 through H3) can be included with chunks when the system evaluates them. Chunk size is limited to about 500 tokens.
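Here is one way to mimic heading-aware chunking on your own content before testing it. Assumptions: the source is Markdown with `#`, `##`, and `###` headings; the H1 > H2 > H3 trail is prepended to each chunk as plain text; and the ~500-token cap is approximated as 375 words, using the common rule of thumb of about 0.75 words per token. Real tokenizers will differ.

```python
import re

HEADING = re.compile(r"^(#{1,3})\s+(.*)$")

def chunk_with_headings(markdown: str, max_words: int = 375) -> list[str]:
    """Split Markdown into chunks, each prefixed with its H1 > H2 > H3 trail."""
    path: list[str] = []    # current heading trail; index 0 is the H1
    chunks: list[str] = []
    buffer: list[str] = []  # words of the chunk being built

    def flush() -> None:
        if buffer:
            prefix = " > ".join(path)
            body = " ".join(buffer)
            chunks.append(f"{prefix}\n{body}" if prefix else body)
            buffer.clear()

    for line in markdown.splitlines():
        match = HEADING.match(line.strip())
        if match:
            flush()  # a new heading always closes the current chunk
            level = len(match.group(1))
            del path[level - 1:]  # drop headings at this level and deeper
            path.append(match.group(2).strip())
            continue
        for word in line.split():
            buffer.append(word)
            if len(buffer) >= max_words:
                flush()
    flush()
    return chunks
```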

He emphasized writing in semantic triples: subject, predicate, object. Instead of vague sentences like “The pros of buying a lake house are many,” write something like “A lake house provides weekend relaxation and rental income potential for homeowners.” The subject is clear, the relationship is explicit, the object is specific.

“Structured data isn’t just schema,” King said. “These systems are crawling the web and looking at semantic triples.” Schema markup is an explicit way to provide that structure, but the same subject-predicate-object logic applies to your prose.

His other tactical points: use specific, extractable data points; avoid ambiguity; write for readability. And remember that this isn’t just about your website—it’s about your entire content ecosystem. Reddit threads, YouTube videos, earned media. All of it is up for grabs in AI retrieval.

The Open Source Stack for AI SEO

King recommended building with open source tools rather than paying for expensive API calls that can spiral out of control. His stack:

Ollama for running open source language models locally. It has a chat interface like ChatGPT, can do RAG directly, auto-downloads models you don’t have, and runs fast if you have a decent GPU. King integrates it with Screaming Frog for embeddings and content audits.
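As a starting point for local embeddings, this sketch calls Ollama’s `/api/embed` endpoint on its default port 11434 and ranks pages against a query. The `nomic-embed-text` model is our choice, not King’s (pull it first with `ollama pull nomic-embed-text`), and this is not how the Screaming Frog integration works internally. The embedding function is injectable so the ranking logic can be tested without a server.

```python
import json
import math
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/embed"  # Ollama's default local address

def embed(texts: list[str], model: str = "nomic-embed-text") -> list[list[float]]:
    """Return one embedding per input text from a locally running Ollama server."""
    payload = json.dumps({"model": model, "input": texts}).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_URL, data=payload, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request, timeout=60) as response:
        return json.loads(response.read())["embeddings"]

def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def rank_pages(query: str, pages: dict[str, str], embed_fn=embed) -> list[tuple[str, float]]:
    """Rank page texts (keyed by URL) by cosine similarity to the query."""
    vectors = embed_fn([query] + list(pages.values()))
    q_vec, page_vecs = vectors[0], vectors[1:]
    scored = {url: cosine(q_vec, v) for url, v in zip(pages, page_vecs)}
    return sorted(scored.items(), key=lambda kv: kv[1], reverse=True)
```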

N8N for workflow automation. It has 1,100+ integrations, can build APIs on your own machine, and has flexible human-in-the-loop features (it can message you on Slack before proceeding to the next step). There are thousands of pre-built workflow templates, including one for AI Overview analysis.

Crew AI for building autonomous agents that can plug into anything you want to automate.

His point: everything these AI platforms use has open source equivalents. You can build simulation tools where you make a change to your content, compare it against competitor content from your query fanout, and see the impact before you deploy.
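A bare-bones version of that simulation: score a page before and after an edit against competitor chunks across your fanout queries, then report which queries you would gain or lose. The win rule (your best chunk must strictly beat every competitor’s best chunk) and the bag-of-words similarity used in place of embeddings are simplifying assumptions.

```python
import math
import re
from collections import Counter

def _vec(text: str) -> Counter:
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def best_score(query: str, chunks: list[str]) -> float:
    qv = _vec(query)
    return max((_cosine(qv, _vec(c)) for c in chunks), default=0.0)

def wins(queries: list[str], my_chunks: list[str], competitor_chunks: dict[str, list[str]]) -> list[str]:
    """Queries where my best chunk strictly beats every competitor's best chunk."""
    won = []
    for q in queries:
        mine = best_score(q, my_chunks)
        rival = max((best_score(q, c) for c in competitor_chunks.values()), default=0.0)
        if mine > rival:
            won.append(q)
    return won

def simulate(queries, before, after, competitor_chunks) -> dict[str, list[str]]:
    """Compare wins before and after a content change."""
    old = set(wins(queries, before, competitor_chunks))
    new = set(wins(queries, after, competitor_chunks))
    return {"gained": sorted(new - old), "lost": sorted(old - new)}
```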

My Takeaways

King’s talk was the most tool-heavy of the conference, and that’s exactly what this moment requires. We’ve been hearing about query fanout conceptually—King showed us how to actually extract the data and use it.

Here’s what I’m implementing:

1. Query fanout research is now mandatory. Traditional keyword research misses 95% of the queries that AI systems generate. Tools like Qforia, Profound, or the reverse intersect method need to be part of every content strategy.

2. Ranking in Google isn’t enough. With only 19 to 25% overlap between Google rankings and AI citations across the Profound and Ziptie data, we’re playing a different game now. The goal is maximizing retrieval across fanout queries, not just ranking for head terms.

3. Format expectations matter. AI systems route queries to expected content types. If your content doesn’t match the format expectation, you lose the retrieval. This reinforces what Krishna said about understanding the pipeline.

4. Write in semantic triples. Subject-predicate-object structure isn’t just for schema markup. It’s how you write prose that AI systems can reason with. This connects directly to what Jori found about structure enabling logic.

5. Build your own tools. The major SEO platforms haven’t caught up. Open source options like Ollama and N8N let you build what you need without waiting for vendors to figure it out.

King closed with a provocation: “SEO irrevocably changed. We can keep calling it SEO and make less money, or call it something else and make a lot more.” Whether or not you change what you call it, the work has changed. Query fanout is the mechanism. The tools exist. Time to use them.