How Your Rendering Strategy Impacts Search Engines and AI Bots

For nearly two decades, JavaScript has been the engine behind interactive, dynamic web experiences. Single-page applications, real-time dashboards, and sophisticated e-commerce platforms all rely on JavaScript to deliver the kind of fluid, app-like experiences users have come to expect. But there’s a growing disconnect between what users see and what search engines and AI systems can actually read.

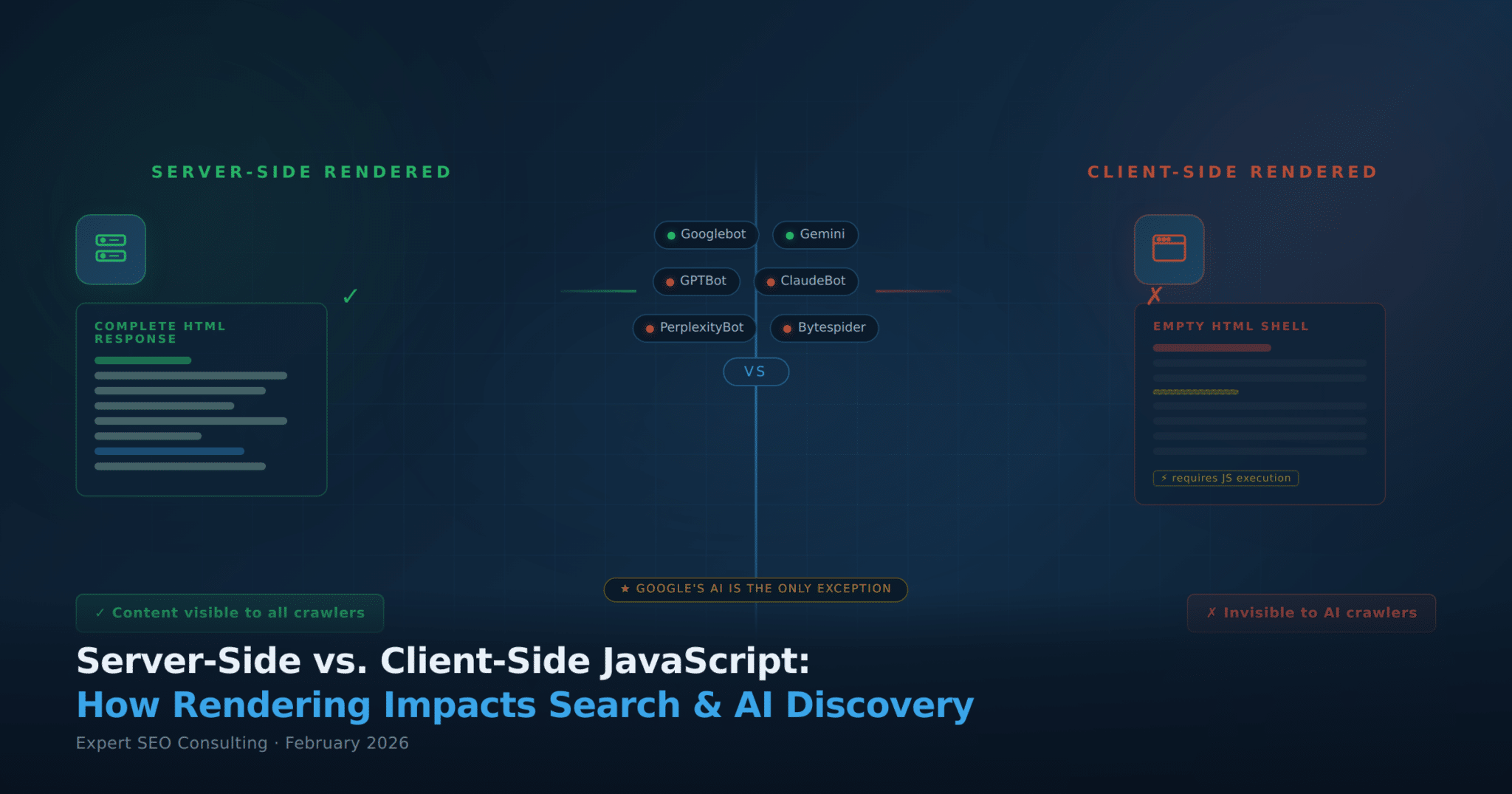

The way your website renders its content—whether on the server before it reaches the browser, or on the client after the page loads—has always mattered for SEO. But in 2026, the stakes are dramatically higher. Google’s AI Overviews, ChatGPT’s web browsing capabilities, Perplexity’s answer engine, and Claude’s search features are all reshaping how content gets discovered and cited. And the uncomfortable truth is that most of these AI systems cannot execute JavaScript at all.

This creates a two-tier web: sites that are visible to both traditional search and the emerging AI ecosystem, and sites that are effectively invisible to every discovery platform except Google.

If you’re a business owner, marketer, or developer making decisions about your site’s architecture, this is the most important technical SEO consideration you’ll face this year. Let’s break it down.

Understanding the Two Rendering Approaches

Server-Side Rendering (SSR)

With server-side rendering, your web server does the heavy lifting. When a crawler or a user’s browser requests a page, the server processes the data, merges it with the page template, and sends back fully formed HTML. By the time the content arrives at its destination—whether that’s a human’s browser or an AI bot’s fetch request—the page is already complete. Every heading, every paragraph, every product description is right there in the HTML.
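As a rough illustration of that flow, the sketch below merges data with a template on the server and returns complete HTML. The template, the product fields, and the function name `render_product_page` are hypothetical and chosen only for illustration; a real site would rely on its framework’s rendering pipeline.

```python
from html import escape
from string import Template

# Hypothetical page template; a real site would use its framework's templates.
PAGE_TEMPLATE = Template(
    "<!doctype html>\n"
    "<html><head><title>$title</title></head>\n"
    "<body><h1>$title</h1><p>$description</p></body></html>"
)


def render_product_page(product: dict) -> str:
    """Merge product data into the template on the server.

    The returned string is complete HTML, so any client that can read
    HTML (a browser or a bot) gets the heading and description directly.
    """
    return PAGE_TEMPLATE.substitute(
        title=escape(product["name"]),
        description=escape(product["description"]),
    )


if __name__ == "__main__":
    # Illustrative data only.
    print(render_product_page(
        {"name": "Trail Shoe", "description": "Lightweight & grippy."}
    ))
```

The key property is that nothing in the response depends on script execution: the content is already in the markup when it leaves the server.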

This is how the web worked for most of its history. Static HTML pages, WordPress sites rendering PHP on the server, and classic content management systems all follow this pattern. Frameworks like Next.js, Nuxt, and SvelteKit have modernized the approach by combining server-side rendering with modern JavaScript capabilities, giving you the best of both worlds.

Client-Side Rendering (CSR)

Client-side rendering flips the model. The server sends a minimal HTML shell, often just a <div id="app"></div> and a bundle of JavaScript files. The visitor’s browser then downloads and executes that JavaScript, which fetches data from APIs, builds the page’s content, and renders everything on screen. Until that JavaScript runs, the page is essentially empty.
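To make the “essentially empty” point concrete, here is a minimal sketch of what a client that does not execute JavaScript can extract from each kind of response. The two HTML strings and the `visible_text` helper are illustrative assumptions, not the behavior of any specific crawler.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collects text nodes, ignoring the contents of <script> and <style>."""

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0 and data.strip():
            self.chunks.append(data.strip())


def visible_text(html: str) -> str:
    """Return the text a non-JavaScript client could read from raw HTML."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


# Hypothetical responses for the same page under each rendering model.
CSR_SHELL = '<html><body><div id="app"></div><script src="/bundle.js"></script></body></html>'
SSR_PAGE = "<html><body><h1>Trail Shoe</h1><p>Lightweight and grippy.</p></body></html>"

if __name__ == "__main__":
    print(repr(visible_text(CSR_SHELL)))
    print(repr(visible_text(SSR_PAGE)))
```

Running a helper like this against the raw response of your own pages is a quick approximation of the “disable JavaScript and reload” check.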

This approach powers most single-page applications built with React, Angular, and Vue. It delivers exceptionally smooth, app-like user experiences with seamless page transitions, real-time updates, and complex interactive features. For dashboards, social platforms, and web-based tools, client-side rendering is often the natural choice.

The problem is what happens when the visitor isn’t a human with a full browser—but a bot with no JavaScript engine.

The Traditional SEO Case: Why SSR Has Always Had the Edge

Search engine crawlers have historically struggled with JavaScript. For years, Googlebot would fetch a page’s HTML and move on, never executing any scripts. Content hidden behind JavaScript was simply invisible to the index.

Google has made enormous strides. Googlebot now runs an evergreen version of headless Chromium and uses a two-phase crawling process: first it fetches the raw HTML, then it queues the page for full JavaScript rendering. Google’s Martin Splitt, a Developer Advocate on the Search team, has noted that the rendering queue processes pages remarkably fast—the 99th percentile completes within minutes, not weeks as some earlier studies suggested.

But “within minutes” is still not instant. And the two-phase approach means there’s always a window where Google has seen your HTML but hasn’t yet rendered your JavaScript. For time-sensitive content—breaking news, flash sales, newly launched products—that delay can matter.

The deeper issue is that server-side rendering eliminates this complexity entirely. When your server delivers complete HTML, there’s no rendering queue, no second pass, no risk of partial indexing. Google gets everything on the first visit. Splitt himself has been clear on this point: for websites that are primarily about presenting information to users, requiring JavaScript is a drawback. It can break, cause problems, make things slower, and drain more battery on mobile devices.

Beyond Google, other traditional search engines like Bing and Yandex have even less sophisticated JavaScript rendering capabilities. Sites relying entirely on client-side rendering have always been taking a gamble with non-Google search visibility.

The AI Bot Revolution: Where the Gap Becomes a Chasm

Here is where the conversation shifts from “SSR is slightly better” to “SSR is essential.”

A landmark study by Vercel, analyzing over half a billion bot fetches, confirmed what many in the industry suspected: none of the major AI crawlers currently render JavaScript. Not OpenAI’s GPTBot. Not Anthropic’s ClaudeBot. Not Meta’s ExternalAgent. Not ByteDance’s Bytespider. Not PerplexityBot. Zero JavaScript execution across the board.

What makes this especially notable is that these crawlers do download JavaScript files (ChatGPT’s crawler, for example, fetches JS files about 11.5% of the time) but never execute them. The bots are collecting the code as text, potentially for training purposes, but they cannot see the content that JavaScript creates.

The sole exception is Google’s AI infrastructure. Gemini, AI Overviews, and AI Mode all leverage Googlebot’s rendering pipeline, which means Google’s AI features can see JavaScript-rendered content just fine. Splitt confirmed this directly: Google’s AI crawler uses a shared rendering service with Googlebot.

But Google is the exception, not the rule. And as the AI discovery landscape fragments across ChatGPT, Perplexity, Claude, Grok, and others, being visible only to Google’s AI means you’re invisible to a rapidly growing share of how people find information.

Real-World Impact: What Happens When AI Can’t See Your Content

The consequences of client-side rendering for AI visibility aren’t theoretical. SEO consultant Glenn Gabe conducted detailed testing on a fully client-side-rendered website and documented the results across ChatGPT, Perplexity, and Claude. The findings were stark.

When asked to retrieve specific content from the site, all three AI platforms failed. ChatGPT reported it couldn’t read the content. Perplexity said it couldn’t find the content or returned access-denied errors—even though the site wasn’t blocking any AI bots. Claude similarly reported it couldn’t retrieve the page content. With JavaScript turned off, the pages were blank—and that’s exactly what the AI crawlers saw.

Even more telling: the site’s favicon wasn’t rendering correctly in AI citations. Both ChatGPT and Perplexity displayed generic default favicons when referencing the site. The favicon worked perfectly in Google and Bing, but the AI platforms never ran the JavaScript that rendered it.

For comparison, Gabe tested pages from other sites that didn’t rely on JavaScript for content rendering. Those pages were read, cited, and summarized accurately by all three AI platforms. The rendering method was the variable.

If your business depends on being cited by AI assistants—and increasingly, every business does—this isn’t an edge case. It’s a visibility crisis.

Pros and Cons: A Side-by-Side Comparison

Server-Side Rendering

Advantages

- Complete HTML on first request. Every crawler—whether it’s Googlebot, GPTBot, ClaudeBot, or PerplexityBot—receives fully rendered content on the first fetch. No second pass required. No rendering queue. No risk of partial indexing or invisible content.

- Universal AI visibility. Because these AI crawlers read raw HTML and nothing more, shipping your content in the initial HTML response is the only approach that keeps it visible across the entire AI ecosystem, not just Google’s.

- Faster initial page loads. Users see content immediately rather than staring at a loading spinner while JavaScript executes. This directly impacts Core Web Vitals metrics like Largest Contentful Paint (LCP), which Google uses as a ranking signal.

- Better accessibility. Users with JavaScript disabled, older devices, or limited bandwidth can still access your content. Progressive enhancement becomes possible.

- Structured data reliability. Schema markup embedded in server-rendered HTML is immediately available to all crawlers. Splitt has confirmed that structured data helps give search engines more confidence in content, and AI platforms use it to build overviews, product comparisons, and featured answers.
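For the structured data point, one possible way to emit schema markup on the server is to build a JSON-LD script tag alongside the page HTML. This is a sketch under stated assumptions: the function name `product_json_ld` and the sample values are hypothetical, and only a handful of schema.org `Product` and `Offer` properties are shown.

```python
import json


def product_json_ld(name: str, description: str, price: str, currency: str) -> str:
    """Build a JSON-LD <script> tag to embed in server-rendered HTML."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "description": description,
        "offers": {
            "@type": "Offer",
            "price": price,
            "priceCurrency": currency,
        },
    }
    # Escape "<" so a value containing "</script>" cannot close the tag early.
    payload = json.dumps(data, indent=2).replace("<", "\\u003c")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


if __name__ == "__main__":
    # Illustrative values only.
    print(product_json_ld("Trail Shoe", "Lightweight and grippy.", "89.00", "USD"))
```

Because the tag is part of the initial response, a crawler that never runs JavaScript can still read it.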

Disadvantages

- Higher server load. Every request requires the server to render the page, which demands more computing resources than simply serving static assets. High-traffic sites need robust caching strategies and potentially more powerful hosting.

- Increased development complexity. Building and maintaining an SSR pipeline requires expertise in both server-side and client-side codebases. Hydration—the process of making server-rendered HTML interactive on the client—adds an additional layer of technical complexity.

- Less fluid interactivity. Pure SSR requires a round trip to the server for every page change. Without client-side JavaScript to handle transitions, the experience can feel less smooth compared to a single-page application.

Client-Side Rendering

Advantages

- Rich, app-like interactivity. Seamless page transitions, real-time data updates, offline capabilities through progressive web apps, and complex user interfaces are all natural strengths of CSR. For applications where the experience is the product—dashboards, collaborative tools, messaging platforms—this is hard to replicate with SSR alone.

- Lower server costs. The server primarily delivers static assets, shifting the rendering burden to the user’s device. This makes CSR applications easier and cheaper to host and scale, especially behind a CDN.

- Faster subsequent navigation. Once the JavaScript bundle is loaded, moving between pages within the application is nearly instantaneous because only data needs to be fetched, not entire new HTML pages.

Disadvantages

- Invisible to AI crawlers. This is the critical issue. GPTBot, ClaudeBot, PerplexityBot, and every other major AI crawler see only the empty HTML shell. Your content, your metadata, your structured data: all of it is invisible unless JavaScript runs. And these bots don’t run JavaScript.

- Slower initial page load. Users must wait for the JavaScript bundle to download, parse, and execute before seeing any content. On mobile devices and slower connections, this can result in several seconds of blank screen or loading spinners—a direct hit to user experience and Core Web Vitals.

- Risk of partial or delayed indexing by Google. While Googlebot can render JavaScript, the two-phase crawling process means there’s always a lag between discovery and full rendering. Complex JavaScript that breaks or times out can result in content never being indexed at all.

- Fragile content delivery. If anything goes wrong during JavaScript execution—a network error during data fetching, a blocked third-party script, a browser incompatibility—the user sees nothing. Splitt has noted this risk explicitly: with CSR, if something goes wrong during transmission, the user won’t see any of your content.

The Middle Ground: Hybrid and Pre-Rendering Approaches

The good news is that this isn’t a binary choice. Modern web development offers several hybrid strategies that can give you the interactivity of client-side rendering with the crawlability of server-side rendering.

Hydration is the approach Splitt himself recommends. The server renders the initial HTML with full content, then client-side JavaScript “hydrates” that HTML by attaching event listeners and enabling interactivity. The user gets fast initial content; crawlers get complete HTML; and the interactive experience kicks in once JavaScript loads. Frameworks like Next.js, Nuxt, and SvelteKit make this pattern straightforward.

Static Site Generation (SSG) pre-renders pages at build time, producing plain HTML files that can be served from a CDN with zero server-side processing. For content that doesn’t change frequently—blog posts, documentation, landing pages—this is the fastest and most crawlable approach possible. Tools like Astro, Hugo, and Gatsby excel here.
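The build-time idea can be sketched in a few lines. The example below is an illustration rather than how Astro, Hugo, or Gatsby work internally; the `build_site` function and the post fields (`slug`, `title`, `body`) are hypothetical.

```python
from html import escape
from pathlib import Path


def build_site(posts: list[dict], out_dir: Path) -> list[Path]:
    """Pre-render every post to a static HTML file at build time."""
    out_dir.mkdir(parents=True, exist_ok=True)
    written = []
    for post in posts:
        html = (
            "<!doctype html>\n"
            f"<html><head><title>{escape(post['title'])}</title></head>\n"
            f"<body><article><h1>{escape(post['title'])}</h1>"
            f"<p>{escape(post['body'])}</p></article></body></html>\n"
        )
        # One directory per slug, so the page can be served at /<slug>/.
        page_path = out_dir / post["slug"] / "index.html"
        page_path.parent.mkdir(parents=True, exist_ok=True)
        page_path.write_text(html, encoding="utf-8")
        written.append(page_path)
    return written


if __name__ == "__main__":
    # Illustrative content only.
    pages = build_site(
        [{"slug": "hello-world", "title": "Hello, world", "body": "First post."}],
        Path("dist"),
    )
    print(pages)
```

The output directory holds plain files, so a CDN can serve them without any per-request rendering.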

Dynamic rendering serves fully rendered HTML to crawlers while delivering the client-side rendered version to human users. Google documents the technique but now describes it as a workaround rather than a long-term solution. It’s a pragmatic bridge for sites that can’t easily migrate to SSR, though it adds infrastructure complexity, and the bot and user versions must carry the same content to avoid being treated as cloaking.
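The core of dynamic rendering is a user-agent check in front of the application. The sketch below assumes a simple substring match; the token list and the names `is_known_bot` and `choose_response` are hypothetical, and a production setup would confirm tokens against each vendor’s published user-agent strings.

```python
# Tokens based on crawler names mentioned in this article, plus Bingbot.
# Assumption: substring matching on the User-Agent header is good enough here.
BOT_TOKENS = (
    "googlebot",
    "bingbot",
    "gptbot",
    "oai-searchbot",
    "claudebot",
    "perplexitybot",
)


def is_known_bot(user_agent: str) -> bool:
    """Return True if the User-Agent contains a known crawler token."""
    ua = user_agent.lower()
    return any(token in ua for token in BOT_TOKENS)


def choose_response(user_agent: str, prerendered_html: str, csr_shell: str) -> str:
    """Serve the pre-rendered snapshot to bots and the CSR shell to everyone else.

    Both variants must represent the same content; serving bots something
    materially different is what turns this pattern into cloaking.
    """
    return prerendered_html if is_known_bot(user_agent) else csr_shell
```

User-agent strings can be spoofed, so this check decides only which rendering of the same content to send, never what content to show.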

Pre-rendering services like Prerender.io offer a similar outcome by generating static HTML snapshots of JavaScript-rendered pages and serving those snapshots to bots. This can be a quick-win solution for existing CSR applications, though it requires careful maintenance to ensure the pre-rendered versions stay current.

What Smart Businesses Should Do Now

The strategic imperative is clear: if you want to be discoverable across both traditional search and the AI ecosystem, your critical content needs to be accessible in the initial HTML response. Here’s a practical roadmap.

- Audit your rendering. Disable JavaScript in your browser and load your most important pages. If the main content disappears, AI crawlers can’t see it either. Tools like the Rendering Difference Engine browser extension or Google’s URL Inspection Tool can help you identify what’s missing.

- Prioritize SSR for content pages. Blog posts, product pages, service descriptions, FAQs, and any page designed to attract organic traffic or AI citations should be server-rendered. Reserve client-side rendering for truly interactive features where it adds genuine value.

- Invest in structured data. Schema markup in server-rendered HTML gives search engines and AI systems extra confidence in your content. It powers rich results, AI Overviews, and product comparisons. As Splitt noted, structured data provides more information and more confidence—even though it doesn’t directly push rankings.

- Ensure your routing is crawler-friendly. If your page routing is handled entirely by client-side JavaScript, AI bots can’t follow links between your pages. Every important page needs to exist as a real URL that returns complete HTML.

- Think beyond Google. Google’s AI can render JavaScript. Nobody else’s can. If your optimization strategy only accounts for Googlebot, you’re leaving a growing portion of AI-driven discovery on the table. ChatGPT alone receives over four billion visits per month. Perplexity, Claude, and Grok are all growing rapidly. Optimizing only for Google is an increasingly risky bet.

- Monitor AI bot access. Check your server logs for GPTBot, ClaudeBot, PerplexityBot, and OAI-SearchBot activity. Understand which AI crawlers are visiting your site and whether they’re seeing your actual content or just empty shells.
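For the log-monitoring step, a small script can give a first count of AI crawler requests. This sketch assumes access logs that include the User-Agent string on each line; the function name `count_ai_bot_hits` and the sample log lines are hypothetical.

```python
from collections import Counter

# Crawler tokens named in the roadmap above.
AI_BOT_TOKENS = ("GPTBot", "ClaudeBot", "PerplexityBot", "OAI-SearchBot")


def count_ai_bot_hits(log_lines) -> Counter:
    """Count requests per AI crawler by scanning each log line for its token."""
    hits = Counter()
    for line in log_lines:
        lowered = line.lower()
        for token in AI_BOT_TOKENS:
            if token.lower() in lowered:
                hits[token] += 1
                break  # attribute each request to at most one bot
    return hits


if __name__ == "__main__":
    # Made-up log lines for illustration; real logs would be read from a file.
    sample = [
        '203.0.113.5 - - [01/Jan/2026:00:00:01 +0000] "GET / HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; GPTBot/1.1)"',
        '203.0.113.9 - - [01/Jan/2026:00:00:02 +0000] "GET /blog HTTP/1.1" 200 2048 "-" "Mozilla/5.0 (compatible; ClaudeBot/1.0)"',
        '203.0.113.7 - - [01/Jan/2026:00:00:03 +0000] "GET / HTTP/1.1" 200 512 "-" "Mozilla/5.0 (Windows NT 10.0) Chrome/120.0"',
    ]
    print(count_ai_bot_hits(sample))
```

Counts alone show which bots visit; pairing them with the response size per URL can hint at whether those bots received full pages or only an empty shell.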

The Bigger Picture: Being Mentioned Is the New Click

We’re living through a fundamental shift in how content is discovered. Traditional SEO was about ranking in a list of ten blue links. The emerging paradigm is about being cited, summarized, and recommended by AI systems that millions of people trust for answers.

In this new landscape, your rendering strategy isn’t just a technical implementation detail—it’s a business strategy decision. A beautifully designed, JavaScript-heavy website that’s invisible to AI crawlers is like having a gorgeous storefront on a street that half your potential customers can’t find on any map.

Google’s Martin Splitt said it best when discussing the choice between rendering strategies: the right approach depends on what your website does. If you’re building a web application with complex interactivity, client-side rendering has its place. But if your goal is to present information to users—and, critically, to be discoverable by the systems that increasingly connect users to information—server-side rendering isn’t just better. It’s essential.

The web is evolving. AI crawlers will get more sophisticated over time. But right now, today, the gap between Google’s JavaScript capabilities and everyone else’s is enormous. And the businesses that recognize this and act on it will have a significant competitive advantage in both traditional search and the AI-driven discovery channels that are reshaping how people find, evaluate, and choose the products and services they need.

Sources & Further Reading

- Search Engine Journal – “Google’s JavaScript Warning & How It Relates to AI Search” (January 2025)

- Search Engine Journal – “How Rendering Affects SEO: Takeaways From Google’s Martin Splitt” (January 2025)

- Google Search Central (YouTube) – Martin Splitt on rendering strategies for SEO

- Vercel – “The Rise of the AI Crawler” (December 2024)

- GSQI / Glenn Gabe – “AI Search and JavaScript Rendering” case study (2025)

- Prerender.io – “Understanding Web Crawlers: Traditional vs. OpenAI’s Bots” (2025)

- seo.ai – “Does ChatGPT and AI Crawlers Read JavaScript?” (2025)

- Daydream – “How OpenAI Crawls and Indexes Your Website” (January 2026)

- Botify – “Client-Side vs. Server-Side JavaScript Rendering: Which Is Better for SEO?”