You’ve worked your behind off to get your website to the top of Google’s rankings. But while 96% of AI citations come from sources with strong E-E-A-T signals, most top-ranking pages lack the structural architecture AI systems need to extract and cite them. Your rankings dashboard might look pristine, but in AI-powered search, where ChatGPT, Perplexity, Google’s AI Overviews, and Gemini answer billions of queries, ranking position has become necessary but no longer sufficient.

The data points in one direction. Brand search volume now shows a 0.334 correlation with AI citations, significantly stronger than ranking position alone. Meanwhile, content with strong vector embedding alignment (cosine similarity scores above 0.88) shows 7.3× higher citation rates than poorly aligned content, regardless of where it ranks.

Traditional SEO metrics are lying to you. Not intentionally. They’re simply measuring the wrong thing.

The Ranking-Citation Disconnect No One’s Talking About

The assumption that “if I rank, I’ll get cited” has created a massive blind spot in SEO strategy. Let me show you why that assumption is fundamentally broken.

AI systems don’t crawl search results pages and work their way down from position one. They retrieve information through semantic vector spaces, entity graphs, and passage-level relevance scoring, processes that operate largely independently of traditional ranking algorithms.

When Wikipedia accounts for 7.8% of all ChatGPT citations and Reddit for 6.6% of Perplexity’s,[5] it isn’t because those sources rank higher. It’s because those platforms have structural characteristics AI systems can reliably parse, extract, and attribute.

Your competitor ranking at position seven with clear entity markup, quotable passage structure, and corroborating third-party mentions will routinely outperform your position-two page with 3,000 words of undifferentiated prose.

The Four Retrieval Blockers Killing Your AI Visibility

Most SEO audits miss these entirely because they’re optimized for crawlers, not for AI retrieval systems. Here’s what actually blocks AI citation, based on analysis of over 7,000 citations:

1. Passage Ambiguity and Poor Extractability

AI systems need atomic, self-contained passages that answer questions without surrounding context. If your content requires reading three paragraphs to understand one concept, it won’t get cited, even if it ranks first.

The fix requires passage-level optimization: each key point must stand alone with clear subjects, explicit relationships, and minimal pronoun references. When we conduct AI SEO Audits, one of the first things we evaluate is whether your content can be extracted in 2-3 sentence chunks that remain coherent.
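A rough way to approximate this check yourself is to split a page into 2-3 sentence chunks and flag any chunk that opens with a bare pronoun, since such a chunk leans on context it no longer carries. The sketch below is a hypothetical heuristic, not the audit’s actual scoring method: the naive regex sentence splitter and the `LEADING_PRONOUNS` set are assumptions you would tune for your own content.

```python
import re

# Hypothetical set of context-dependent openers; tune for your own content.
LEADING_PRONOUNS = {"it", "this", "that", "these", "those", "they", "he", "she", "its", "their"}


def split_sentences(text: str) -> list[str]:
    """Naive splitter: break after ., ! or ? when followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def chunk_passages(text: str, size: int = 3) -> list[str]:
    """Group consecutive sentences into chunks of at most `size` sentences."""
    sentences = split_sentences(text)
    return [" ".join(sentences[i:i + size]) for i in range(0, len(sentences), size)]


def flag_dangling_chunks(text: str, size: int = 3) -> list[tuple[int, str]]:
    """Return (index, chunk) pairs whose first word is in LEADING_PRONOUNS."""
    flagged = []
    for i, chunk in enumerate(chunk_passages(text, size)):
        # Strip punctuation such as quotes before comparing the first word.
        first_word = re.sub(r"[^A-Za-z']", "", chunk.split()[0]).lower()
        if first_word in LEADING_PRONOUNS:
            flagged.append((i, chunk))
    return flagged


if __name__ == "__main__":
    page = ("Vector search ranks passages by meaning. It compares embeddings. "
            "This matters for AI citation. It also affects recall.")
    for index, chunk in flag_dangling_chunks(page, size=2):
        print(index, chunk)
```

A flagged chunk is not automatically unusable, but it is a good candidate for rewriting with an explicit subject.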

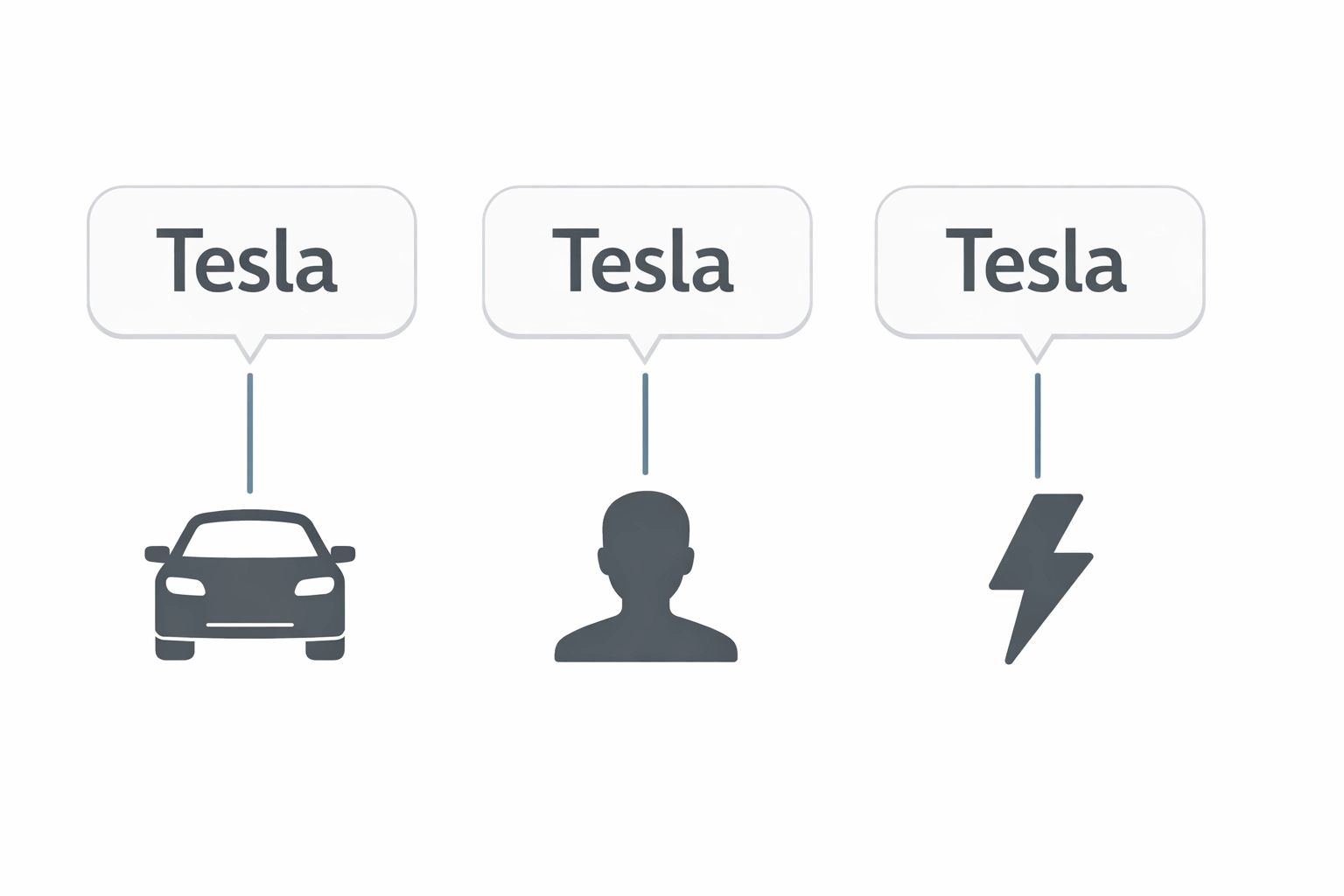

2. Entity Disambiguation Failures

Pages with 15+ recognized entities show 4.8× higher selection probability.[6] But here’s the catch: those entities must be disambiguated, that is, clearly defined through proper-noun clarity, contextual specificity, and, ideally, schema markup that explicitly declares what each entity refers to.

If you mention “Tesla” without clarifying whether you mean the company, the inventor, or the SI unit of magnetic flux density, AI systems will deprioritize your content as ambiguous. Entity clarity isn’t optional anymore; it’s a mandatory filter.
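On the schema side, one common way to declare which “Tesla” you mean is schema.org JSON-LD with `sameAs` links pointing to an authoritative page about that exact entity. The sketch below builds such markup in Python. `entity_jsonld` and `as_script_tag` are hypothetical helpers, and whether a particular AI platform reads this markup when selecting sources is an assumption, not something the figures above establish.

```python
import json
from typing import Optional


def entity_jsonld(entity_type: str, name: str, same_as: list[str],
                  url: Optional[str] = None) -> str:
    """Build a schema.org JSON-LD object that ties a name to one specific entity."""
    data = {
        "@context": "https://schema.org",
        "@type": entity_type,  # e.g. "Organization" or "Person"
        "name": name,
        # sameAs links point to authoritative pages describing the same entity.
        "sameAs": same_as,
    }
    if url is not None:
        data["url"] = url
    return json.dumps(data, indent=2)


def as_script_tag(jsonld: str) -> str:
    """Wrap JSON-LD in the script tag used to embed it in an HTML page."""
    return f'<script type="application/ld+json">\n{jsonld}\n</script>'


if __name__ == "__main__":
    company = entity_jsonld(
        "Organization",
        "Tesla, Inc.",
        same_as=["https://en.wikipedia.org/wiki/Tesla,_Inc."],
        url="https://www.tesla.com",
    )
    print(as_script_tag(company))
```

The same helper with `"Person"` and a link to the Nikola Tesla article would disambiguate the inventor instead.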

3. Semantic Misalignment at the Vector Level

This is where most traditional SEO strategies completely fall apart. You can rank for a keyword while being semantically misaligned with what users actually want to know.

Content with cosine similarity scores below 0.70 to the query intent rarely gets cited, regardless of ranking.[6] AI systems are evaluating your content in multi-dimensional vector space, measuring how closely your semantic meaning aligns with the conceptual core of the query.

Keyword optimization alone cannot achieve this. You need content that structurally mirrors the information architecture of the question being asked.
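The cosine similarity score behind those thresholds is straightforward to compute: the dot product of two embedding vectors divided by the product of their lengths. The sketch below uses tiny hand-written vectors as stand-ins for real embeddings, which would come from an embedding model and have hundreds or thousands of dimensions. The `alignment_band` cut-offs simply reuse the 0.70 and 0.88 figures quoted in this article; they are not thresholds published by any AI platform.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: dot(a, b) / (|a| * |b|)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


def alignment_band(score: float) -> str:
    """Bucket a score using the 0.70 / 0.88 figures cited in this article."""
    if score > 0.88:
        return "strong"
    if score < 0.70:
        return "weak"
    return "moderate"


if __name__ == "__main__":
    # Toy 4-dimensional stand-ins for a query embedding and a passage embedding.
    query = [0.9, 0.1, 0.3, 0.0]
    passage = [0.8, 0.2, 0.4, 0.1]
    score = cosine_similarity(query, passage)
    print(f"{score:.3f}", alignment_band(score))
```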

4. Weak External Corroboration Signals

AI citation isn’t a solo performance; it’s a reputation referendum. Analysis shows that AI systems heavily weight whether other authoritative sources confirm, link to, or reference your claims.

A top-ranking page from an isolated brand with no third-party validation, no citations from industry publications, and no mentions in authoritative forums will consistently lose to lower-ranking pages from brands with robust external corroboration footprints.

Your entity needs to exist beyond your own domain. Reddit threads, industry publication mentions, Wikipedia references, and academic citations create the “reference gravity” that AI systems use to judge whether your content is trustworthy enough to cite.
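A crude first pass at measuring that footprint is to count how many distinct third-party domains mention you, given a list of mention URLs from whatever monitoring source you use. The sketch below is a hypothetical proxy, not an authority model: it ignores how trustworthy each domain is, and `example.com` stands in for your own site.

```python
from urllib.parse import urlparse


def _normalize_host(host: str) -> str:
    """Lowercase a hostname and drop a leading 'www.'."""
    host = host.lower()
    return host[4:] if host.startswith("www.") else host


def third_party_domains(mention_urls: list[str], own_domain: str) -> set[str]:
    """Distinct hosts that mention you, excluding your domain and its subdomains."""
    own = _normalize_host(own_domain)
    found = set()
    for url in mention_urls:
        # urlparse(...).hostname is None for strings without a network location.
        host = _normalize_host(urlparse(url).hostname or "")
        if host and host != own and not host.endswith("." + own):
            found.add(host)
    return found


if __name__ == "__main__":
    mentions = [
        "https://www.reddit.com/r/SEO/comments/abc",
        "https://example.com/blog/post",
        "https://en.wikipedia.org/wiki/Search_engine_optimization",
    ]
    print(sorted(third_party_domains(mentions, "example.com")))
```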

How Each AI Platform Creates Different Citation Barriers

The fragmentation of AI search means you’re no longer optimizing for one algorithm but for platform-specific retrieval preferences that often conflict.

Google’s AI Overviews prioritize sites that rank for related “fan-out” queries, the subtopic searches spawned from the original query; pages ranking for them show 161% higher citation likelihood. ChatGPT heavily weights conversational structure and direct answer passages. Perplexity favors discussion-based content with multiple viewpoints.

This creates a strategic problem: optimizing exclusively for one platform’s preferences can hurt performance on others. The only sustainable approach is building retrieval-ready content architecture that meets baseline requirements across all platforms while monitoring performance on each.

That’s where measurement becomes essential, and where most teams are flying blind.

Measuring the Gap: Why You Need AI Citation Tracking

Traditional analytics cannot tell you whether AI systems are citing you, misquoting you, citing competitors instead, or ignoring your content entirely. You need a completely separate measurement layer.

Citemetrix exists specifically to close this visibility gap. It tracks your brand mentions and citations across ChatGPT, Perplexity, Gemini, and Google AI Overviews, monitoring not just whether you’re being cited but which pages, which queries, and which competitors are winning citations you should own.

The measurement reveals patterns traditional SEO tools miss entirely:

- Citation frequency by topic cluster (which content themes AI systems trust you on)

- Competitor citation overlap (where you rank well but competitors get cited instead)

- Misattribution incidents (where AI systems cite your content but attribute it incorrectly)

- Temporal citation patterns (whether your visibility is growing or declining as AI systems evolve)
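To make the first two metrics concrete, here is a minimal sketch over hypothetical tracking records. The `AnswerRecord` shape is an assumption for illustration, not Citemetrix’s export format or API: each record holds one AI answer to a tracked query, the domains it cited, and your organic rank for that query.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class AnswerRecord:
    query: str
    topic: str                # topic cluster the query belongs to
    platform: str             # e.g. "ChatGPT", "Perplexity"
    cited_domains: set[str]   # domains cited in the AI answer
    our_rank: Optional[int]   # our organic rank for the query, None if unranked


def citation_frequency_by_topic(records: list[AnswerRecord], our_domain: str) -> dict[str, float]:
    """Share of answers in each topic cluster that cite our domain."""
    totals = Counter(r.topic for r in records)
    cited = Counter(r.topic for r in records if our_domain in r.cited_domains)
    return {topic: cited[topic] / totals[topic] for topic in totals}


def ranked_but_not_cited(records: list[AnswerRecord], our_domain: str, top_n: int = 3) -> list[str]:
    """Queries where we rank in the top N but the answer cited only other domains."""
    return [
        r.query
        for r in records
        if r.our_rank is not None
        and r.our_rank <= top_n
        and r.cited_domains
        and our_domain not in r.cited_domains
    ]
```

Grouping the same records by `platform` instead of `topic` would give a per-platform view of the same gap.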

When we combine AI SEO Audit findings with Citemetrix data, we can pinpoint exactly why high-ranking pages aren’t converting into AI citations and build surgical fixes that directly address the retrieval blockers holding you back.

What AI SEO Audits Actually Reveal

Standard technical audits evaluate crawlability, indexation, and ranking factors. AI SEO Audits evaluate retrievability, extractability, and citation probability.

Here’s what we assess that traditional audits miss:

✓ Passage-level coherence scoring (can individual paragraphs stand alone?)

✓ Entity density and disambiguation quality (are key concepts clearly defined?)

✓ Semantic vector alignment (does your content structurally match query intent?)

✓ External corroboration footprint (what validates your authority beyond your site?)

✓ Multi-platform retrieval compatibility (will this work across ChatGPT, Perplexity, and AI Overviews?)

✓ Citation attribution clarity (can AI systems correctly attribute information to you?)

The audit produces a retrieval readiness score for each critical page, identifying specific structural blockers and prioritizing fixes based on traffic value and competitive citation gaps revealed by Citemetrix data.

Most clients discover that 60-70% of their “optimized” content has significant retrieval blockers, even on pages ranking in the top three positions.
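The article doesn’t publish how the retrieval readiness score is calculated, so what follows is only a hypothetical sketch of the general idea. It combines per-check sub-scores, each assumed to fall in the 0-1 range, using weights. It then ranks pages for fixing by how unready they are, how much traffic value they carry, and how large their competitive citation gap is. The weights, the names, and the priority formula are all illustrative assumptions.

```python
# Hypothetical weights for the six audit checks listed above; they sum to 1.0.
WEIGHTS = {
    "passage_coherence": 0.25,
    "entity_clarity": 0.20,
    "semantic_alignment": 0.25,
    "external_corroboration": 0.15,
    "platform_compatibility": 0.10,
    "attribution_clarity": 0.05,
}


def readiness_score(subscores: dict[str, float]) -> float:
    """Weighted average of sub-scores, each expected to be in [0, 1]."""
    missing = WEIGHTS.keys() - subscores.keys()
    if missing:
        raise KeyError(f"missing sub-scores: {sorted(missing)}")
    for name, value in subscores.items():
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {value}")
    return sum(weight * subscores[name] for name, weight in WEIGHTS.items())


def fix_priority(subscores: dict[str, float], traffic_value: float, citation_gap: float) -> float:
    """Higher means fix sooner. citation_gap: share (0-1) of tracked queries won by competitors."""
    return (1.0 - readiness_score(subscores)) * traffic_value * (1.0 + citation_gap)


if __name__ == "__main__":
    page = dict.fromkeys(WEIGHTS, 0.5)
    page["semantic_alignment"] = 0.2
    print(round(readiness_score(page), 3), round(fix_priority(page, 1200.0, 0.4), 1))
```

Multiplying by `1.0 + citation_gap` means a page with no competitive gap still gets a nonzero priority when it is unready and valuable; a different weighting is equally defensible.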

The New Success Metric: Citation Share vs. Ranking Share

We’re entering a bifurcated search landscape where ranking share and citation share are measured independently, and where citation share increasingly determines whether you control the narrative around your expertise.

If AI systems cite competitors when users ask about your core topics, those competitors become the default authority, regardless of where you rank. Brand perception is being rewritten in real time by which sources AI platforms choose to surface and attribute.

The strategic imperative is clear: build content that ranks and gets retrieved. Optimize pages for crawlers and for vector-based semantic matching. Establish authority on your site and across the broader web ecosystem.

This requires fundamentally different content architecture, ongoing citation monitoring, and regular retrieval audits as AI systems continue to evolve their selection criteria.
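Both shares can be computed over the same set of tracked queries. In this hypothetical sketch, ranking share is the fraction of queries where you rank in the organic top N, and citation share is the fraction where an AI answer cited you. These definitions are assumptions, since the article doesn’t pin them down precisely.

```python
from typing import Optional


def ranking_and_citation_share(
    results: list[tuple[Optional[int], bool]], top_n: int = 3
) -> tuple[float, float]:
    """results holds (our organic rank or None, whether an AI answer cited us) per query."""
    if not results:
        raise ValueError("need at least one tracked query")
    ranked = sum(1 for rank, _ in results if rank is not None and rank <= top_n)
    cited = sum(1 for _, was_cited in results if was_cited)
    return ranked / len(results), cited / len(results)


if __name__ == "__main__":
    tracked = [(1, False), (2, True), (3, False), (8, True), (None, False)]
    ranking_share, citation_share = ranking_and_citation_share(tracked)
    print(f"ranking share {ranking_share:.0%}, citation share {citation_share:.0%}")
```

When ranking share runs well ahead of citation share across a topic, that is the ranking-citation gap this article describes.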

Stop Optimizing for Ghosts

Traditional rankings are becoming ghost metrics, visible in dashboards but increasingly disconnected from actual traffic and brand authority. The future of search visibility is being written right now in how AI systems decide which sources to trust, cite, and elevate.

If you’re still measuring success purely by ranking position while AI citations go untracked, you’re optimizing for a reality that no longer determines outcomes.

We help clients build retrieval-ready content strategies backed by Citemetrix measurement and comprehensive AI SEO audits. The gap between where you rank and whether you get cited is measurable, fixable, and essential to address now, before your competitors establish citation dominance in your category.

Want to know where your content stands? Book a consultation and we’ll run a citation gap analysis on your top 10 ranking pages, showing you exactly where AI systems are choosing competitors instead of you, and why.