AI bots are crawling your website right now, and you probably don’t even know it. While traditional search engine crawlers like Googlebot have been on SEO professionals’ radar for decades, a new generation of artificial intelligence crawlers from OpenAI, Anthropic, Perplexity, and other AI platforms is silently consuming your content for training data, real-time citations, and knowledge base updates.

Here’s the thing: 67% of websites have no visibility into which AI platforms are accessing their content or how frequently. This blind spot is costing businesses valuable insights into how their content performs in the age of generative search and large language models.

The solution? Screaming Frog’s Log File Analyzer now includes specialized features for monitoring AI bot activity, giving you unprecedented visibility into how these next-generation crawlers interact with your site.

Why Monitoring AI Bots Is No Longer Optional

Unlike JavaScript-based analytics tools, which mostly record human visitors because most crawlers never execute the tracking script, log file analysis reveals the complete picture: every request your server receives, including those from bots. AI platforms don’t behave like typical users: they crawl systematically, often targeting specific content types, and their activity patterns can significantly impact server resources.

The numbers tell the story: Websites in competitive industries are seeing AI bot traffic account for 15-25% of total server requests. That’s bandwidth consumption, server load, and content consumption happening completely outside your analytics dashboard.

More importantly, understanding which content AI platforms prioritize helps you optimize for generative search results. When ChatGPT or Perplexity cites your content, that citation depends on the platform’s crawler having fetched your page, either in advance or in real time, and those fetches leave a trail in your server logs that you can now track and analyze.
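If you want a rough idea of where your own site sits relative to that 15-25% range before opening any tool, you can count AI bot user agents in a sample of raw requests. The sketch below is a minimal Python example, assuming the user-agent strings have already been extracted from the log; the `AI_BOT_TOKENS` tuple is an illustrative, non-exhaustive list, not an official registry.

```python
# Illustrative (not exhaustive) substrings identifying AI crawler user agents.
AI_BOT_TOKENS = (
    "GPTBot", "ChatGPT-User", "OAI-SearchBot",
    "ClaudeBot", "Claude-Web", "PerplexityBot",
)


def is_ai_bot(user_agent: str) -> bool:
    """Return True if the user agent contains any known AI bot token (case-insensitive)."""
    ua = user_agent.lower()
    return any(token.lower() in ua for token in AI_BOT_TOKENS)


def ai_request_share(user_agents: list[str]) -> float:
    """Percentage of requests whose user agent matches an AI bot token."""
    if not user_agents:
        return 0.0
    ai_hits = sum(1 for ua in user_agents if is_ai_bot(ua))
    return 100.0 * ai_hits / len(user_agents)


sample = [
    "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; GPTBot/1.1; +https://openai.com/gptbot)",
    "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36",
    "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; ClaudeBot/1.0; +claudebot@anthropic.com)",
]
print(f"{ai_request_share(sample):.1f}% of requests came from AI bots")  # 2 of 4 -> 50.0%
```

Substring matching on user agents is cheap but trusts whatever the client claims; spoofed user agents will be counted too.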

Getting Started: Installation and Setup Requirements

The Screaming Frog Log File Analyzer requires a machine running Windows, Mac, or Linux with Java 17 and at least 2GB of RAM available. The tool stores log event data in a local database, allowing it to handle log files containing millions of events.

Critical requirement: Maintain at least 1GB of free hard drive space. Log file databases can grow substantially when you analyze extended periods or high-traffic sites.

Installation is straightforward: download the application from Screaming Frog’s website and follow the standard installation process for your operating system. The tool supports the standard log formats produced by Microsoft IIS (Windows), Apache, and Nginx servers.
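The Log File Analyzer parses these formats for you, but a quick script is handy for spot-checking a log before import. Below is a minimal sketch, assuming the default Apache/Nginx "combined" format; IIS logs use the W3C extended format and need a different parser, and any custom `LogFormat` (Apache) or `log_format` (Nginx) directive needs its own pattern.

```python
import re
from datetime import datetime
from typing import Optional

# Apache/Nginx "combined" format:
# ip ident user [time] "request" status bytes "referer" "user-agent"
COMBINED_LOG = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<url>\S+)[^"]*" '
    r'(?P<status>\d{3}) (?P<bytes>\d+|-) '
    r'"(?P<referer>[^"]*)" "(?P<user_agent>[^"]*)"'
)


def parse_line(line: str) -> Optional[dict]:
    """Parse one combined-format log line; return None if it does not match."""
    match = COMBINED_LOG.match(line)
    if match is None:
        return None
    entry = match.groupdict()
    entry["status"] = int(entry["status"])
    # A "-" in the bytes field means no response body was sent.
    entry["bytes"] = 0 if entry["bytes"] == "-" else int(entry["bytes"])
    entry["time"] = datetime.strptime(entry["time"], "%d/%b/%Y:%H:%M:%S %z")
    return entry


line = (
    '203.0.113.7 - - [10/Mar/2025:03:15:42 +0000] "GET /blog/ai-crawlers HTTP/1.1" '
    '200 5123 "-" "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; '
    'GPTBot/1.1; +https://openai.com/gptbot)"'
)
entry = parse_line(line)
print(entry["url"], entry["status"], entry["bytes"])
```

Returning `None` for non-matching lines lets you count how many lines a given pattern fails to parse, which is a quick way to detect that a server uses a custom format.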

Importing and Configuring Your Log Files

Step 1: Import Your Server Logs

The import process couldn’t be simpler. Drag and drop your raw server log files directly into the application window. The tool automatically detects and parses common log formats without requiring manual configuration. You can import single files, multiple files simultaneously, or compressed archives (ZIP or GZIP formats).

Step 2: Create Your Project and Configure AI Bot Tracking

When you import logs, you’ll be prompted to create a new project. This is where the magic happens for AI bot monitoring. By default, the Log File Analyzer only analyzes traditional search engine bots, so manual configuration is essential for AI platforms.

Navigate to the ‘User Agents’ tab during project setup. Here, you’ll see two panes: ‘Available’ user agents and ‘Selected’ user agents. The tool includes built-in presets for major AI platforms including:

- OpenAI crawlers (GPTBot)

- Perplexity AI bots

- Anthropic crawlers (Claude-Web)

- Common AI training bots

- Research platform crawlers

Use the arrow controls to move desired AI user agents from ‘Available’ to ‘Selected’ for your analysis.
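If you cross-check the tool’s figures with your own scripts, you need the same mapping from raw user-agent strings to platforms. A minimal sketch: the token-to-platform mapping below is an assumption based on crawler names these vendors publicly document, and those lists change over time, so verify them against each vendor’s current documentation.

```python
from collections import Counter
from typing import Optional

# Hypothetical token-to-platform mapping; verify names against vendor documentation.
PLATFORM_TOKENS = {
    "GPTBot": "OpenAI",
    "ChatGPT-User": "OpenAI",
    "OAI-SearchBot": "OpenAI",
    "ClaudeBot": "Anthropic",
    "Claude-Web": "Anthropic",
    "PerplexityBot": "Perplexity",
}


def classify_platform(user_agent: str) -> Optional[str]:
    """Return the AI platform whose token appears in the user agent, or None."""
    ua = user_agent.lower()
    for token, platform in PLATFORM_TOKENS.items():
        if token.lower() in ua:
            return platform
    return None


agents = [
    "Mozilla/5.0 (compatible; GPTBot/1.1; +https://openai.com/gptbot)",
    "Mozilla/5.0 (compatible; ClaudeBot/1.0; +claudebot@anthropic.com)",
    "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
]
counts = Counter(classify_platform(ua) or "Not an AI bot" for ua in agents)
print(counts)
```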

Pro tip: Enable ‘Verify Bots When Importing Logs’ if you suspect fraudulent bot activity. While this feature currently applies primarily to search engine bots (with AI bot verification planned for future updates), it adds an extra layer of authenticity verification by cross-checking against publicly confirmed IP addresses.
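Until AI bot verification is available in the tool, you can run a similar check yourself. Some AI vendors publish the IP ranges their crawlers use (OpenAI, for example, documents ranges for GPTBot), so a request that claims to be that bot but comes from an address outside those ranges is suspect. A minimal sketch using Python’s standard `ipaddress` module; the CIDR blocks below are documentation placeholders, not real crawler ranges.

```python
import ipaddress

# Placeholder ranges (RFC 5737 documentation blocks), NOT real crawler IPs.
# Replace them with the ranges each vendor currently publishes.
PUBLISHED_RANGES = {
    "GPTBot": ["192.0.2.0/24", "198.51.100.0/25"],
}


def ip_is_published(bot: str, ip: str) -> bool:
    """True if `ip` falls inside any published range for `bot`."""
    address = ipaddress.ip_address(ip)
    return any(
        address in ipaddress.ip_network(cidr)
        for cidr in PUBLISHED_RANGES.get(bot, [])
    )


for ip in ["192.0.2.44", "203.0.113.9"]:
    status = "verified" if ip_is_published("GPTBot", ip) else "unverified, possible spoof"
    print(f"GPTBot request from {ip}: {status}")
```

A bot with no published ranges always comes back unverified here, which is deliberate: absence of evidence is treated as "not confirmed" rather than "genuine".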

Analyzing AI Bot Activity: Understanding the Data Tabs

Once import completes, the Log File Analyzer organizes AI bot activity across multiple specialized tabs, each offering unique insights into crawler behavior.

Overview Tab: Your AI Bot Dashboard

The Overview tab provides immediate visibility into AI bot activity with key performance metrics:

- Total AI bot requests vs. traditional search engine requests

- Unique URLs accessed by AI platforms

- Average bytes transferred per AI bot request

- Server response time patterns for AI crawlers
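The first three of these headline numbers are simple aggregates. A minimal sketch, assuming each request has already been labeled as an AI bot or search engine hit (the `LogEntry` type and its `bot_type` labels are hypothetical); response times are left out because the default combined log format does not record them.

```python
from dataclasses import dataclass


@dataclass
class LogEntry:
    bot_type: str    # hypothetical label assigned upstream: "ai" or "search"
    url: str
    bytes_sent: int


def overview(entries: list[LogEntry]) -> dict:
    """Headline counts comparable to an AI bot overview panel."""
    ai = [e for e in entries if e.bot_type == "ai"]
    search = [e for e in entries if e.bot_type == "search"]
    return {
        "ai_requests": len(ai),
        "search_requests": len(search),
        "ai_unique_urls": len({e.url for e in ai}),
        "ai_avg_bytes": sum(e.bytes_sent for e in ai) / len(ai) if ai else 0.0,
    }


entries = [
    LogEntry("ai", "/blog/a", 1000),
    LogEntry("ai", "/blog/a", 3000),
    LogEntry("ai", "/faq", 2000),
    LogEntry("search", "/blog/a", 500),
]
print(overview(entries))
```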

The charts visualize crawl activity patterns over time, revealing when AI platforms are most active on your site. Many AI bots operate on different schedules than traditional search crawlers, often showing increased activity during off-peak hours.
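To check for an off-peak pattern on your own site, bucket AI bot requests by hour of day. A minimal sketch, assuming timestamps are already parsed into `datetime` objects; hours are reported in the log’s own time zone, which may differ from your audience’s.

```python
from collections import Counter
from datetime import datetime


def requests_per_hour(timestamps: list[datetime]) -> list[int]:
    """Return 24 counts (index 0 = the midnight hour) in the timestamps' own time zone."""
    counts = Counter(ts.hour for ts in timestamps)
    return [counts.get(hour, 0) for hour in range(24)]


def busiest_hours(timestamps: list[datetime], top: int = 3) -> list[int]:
    """Hours of the day with the most requests, busiest first."""
    counts = Counter(ts.hour for ts in timestamps)
    return [hour for hour, _ in counts.most_common(top)]


hits = [
    datetime(2025, 3, 10, 2, 5),
    datetime(2025, 3, 10, 2, 40),
    datetime(2025, 3, 10, 3, 15),
    datetime(2025, 3, 10, 14, 0),
]
print(requests_per_hour(hits))
print(busiest_hours(hits, top=1))
```

Comparing this histogram for AI bots against the same histogram for search engine bots shows whether their schedules actually differ on your site.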

URLs Tab: Content Performance Analysis

This tab shows every unique URL discovered in your logs with AI bot-specific metrics:

- Request frequency by AI platform

- HTTP response codes for AI bot requests

- Bytes transferred to each AI platform

- Server response times per URL for AI crawlers

Here’s what this data often reveals: AI bots tend to favor specific content types. On many sites, blog posts, product descriptions, and FAQ pages receive disproportionate attention from AI platforms compared with navigation or administrative pages.
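You can test whether your site follows this pattern by grouping AI bot hits by site section. A minimal sketch, assuming content types can be inferred from URL path prefixes; the `SECTION_PREFIXES` mapping is hypothetical and should be replaced with your own URL structure.

```python
from collections import Counter

# Hypothetical path prefixes; replace with your site's structure.
SECTION_PREFIXES = {
    "/blog/": "Blog post",
    "/products/": "Product page",
    "/faq": "FAQ",
}


def content_type(url: str) -> str:
    """Map a requested URL to a content type using its path prefix."""
    path = url.split("?", 1)[0]  # ignore query strings
    for prefix, label in SECTION_PREFIXES.items():
        if path.startswith(prefix):
            return label
    return "Other"


ai_bot_urls = ["/blog/a", "/blog/b?ref=x", "/products/widget", "/faq", "/about"]
print(Counter(content_type(url) for url in ai_bot_urls).most_common())
```

To judge whether a section gets "disproportionate" attention, compare these counts with how many URLs each section actually contains.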

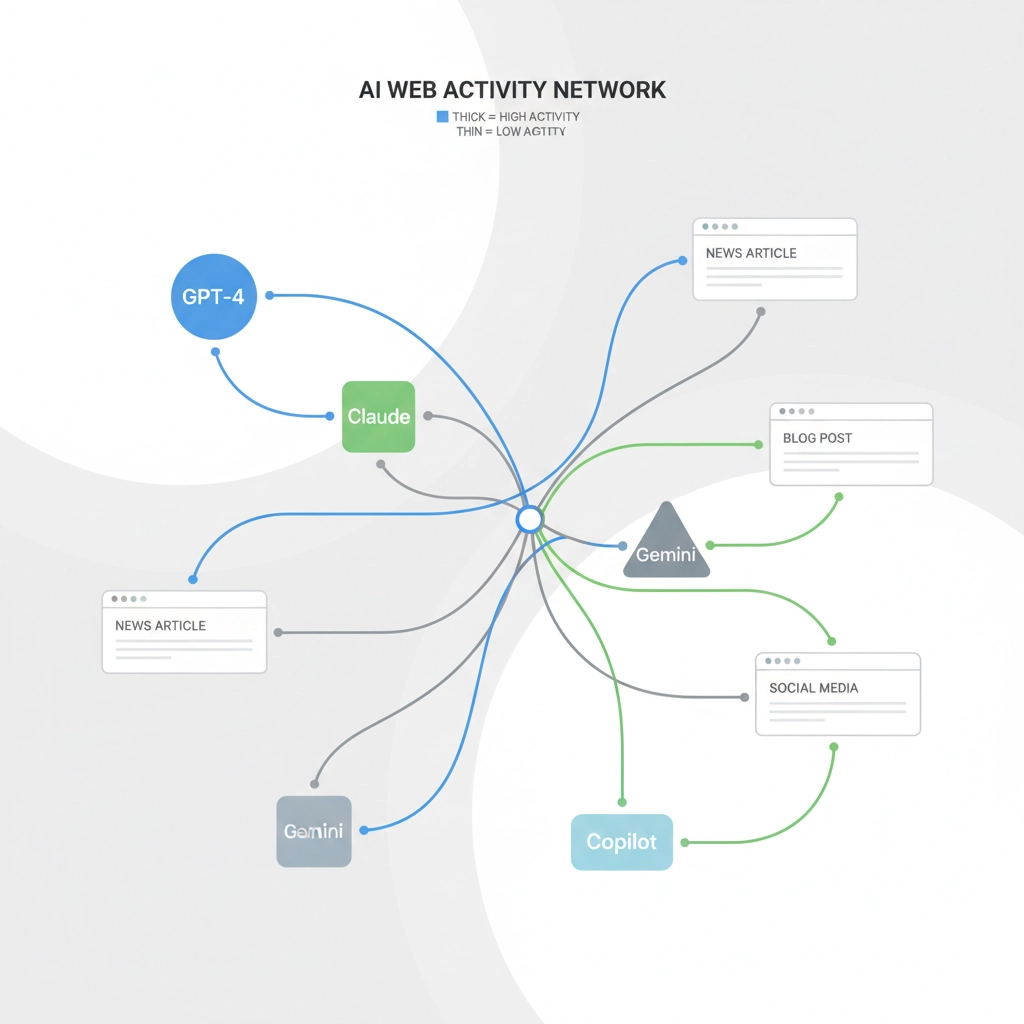

User Agents Tab: Platform-Specific Insights

The User Agents tab aggregates data by bot type, showing total requests, unique URLs accessed, error rates, and response code distributions for each AI platform. This comparison view reveals which AI platforms are most active on your site and how their crawling behavior differs.

Key insight: OpenAI’s crawlers typically access broader content ranges, while specialized AI platforms often focus on specific content categories relevant to their training objectives.
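To confirm a pattern like this on your own data, the same per-platform aggregates can be computed from parsed log entries. A minimal sketch, assuming each entry is a `(platform, url, status, bytes_sent)` tuple with the platform already identified; here an "error" is any 4XX or 5XX status.

```python
from collections import defaultdict


def platform_summary(entries):
    """Aggregate (platform, url, status, bytes_sent) tuples per AI platform."""
    stats = defaultdict(lambda: {"requests": 0, "urls": set(), "errors": 0, "bytes": 0})
    for platform, url, status, bytes_sent in entries:
        s = stats[platform]
        s["requests"] += 1
        s["urls"].add(url)
        s["errors"] += int(status >= 400)  # count any 4XX or 5XX response as an error
        s["bytes"] += bytes_sent
    return {
        platform: {
            "requests": s["requests"],
            "unique_urls": len(s["urls"]),
            "error_rate": s["errors"] / s["requests"],
            "bytes_transferred": s["bytes"],
        }
        for platform, s in stats.items()
    }


sample = [
    ("OpenAI", "/blog/a", 200, 1000),
    ("OpenAI", "/blog/b", 404, 200),
    ("OpenAI", "/blog/a", 200, 1000),
    ("Anthropic", "/faq", 500, 0),
]
for platform, row in platform_summary(sample).items():
    print(platform, row)
```

The ratio of unique URLs to requests is a rough breadth measure: a platform that spreads its requests over many distinct URLs is crawling more broadly than one that revisits the same pages.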

Response Codes Tab: Technical Health Monitoring

This tab breaks down HTTP status codes specifically for AI bot requests. The ‘Inconsistent’ column flags URLs returning different response codes across multiple AI bot visits: a critical indicator of technical issues that could impact how AI platforms perceive your content quality.

Why this matters: a URL that returns 4XX or 5XX errors to AI bots serves them no content, so it cannot be used for training or cited in answers, and repeated errors may lead crawlers to give it lower priority in future crawls or training data selection.
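The ‘Inconsistent’ check is easy to reproduce on raw logs: collect the set of status codes each URL returned to AI bots and keep the URLs with more than one. A minimal sketch, assuming AI bot requests have already been reduced to `(url, status)` pairs.

```python
from collections import defaultdict


def inconsistent_urls(hits):
    """Return {url: sorted status codes} for URLs that returned more than one code."""
    codes = defaultdict(set)
    for url, status in hits:
        codes[url].add(status)
    return {url: sorted(seen) for url, seen in codes.items() if len(seen) > 1}


ai_hits = [("/a", 200), ("/a", 200), ("/b", 200), ("/b", 503), ("/c", 404)]
print(inconsistent_urls(ai_hits))
```

Note that `/c`, which always returns 404, is not flagged: it is consistently broken rather than inconsistent, so it belongs in a separate 4XX review.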

Advanced Analysis: Combining Crawl Data with Log Insights

For comprehensive AI bot analysis, combine your log file data with SEO Spider crawl data. Export the ‘Internal’ tab from a Screaming Frog SEO Spider crawl and import it directly into the Log File Analyzer’s ‘Imported URL Data’ tab.

This integration enables powerful filtering options:

“Matched With URL Data” shows URLs appearing in both your site crawl and AI bot logs, revealing which discoverable pages AI platforms actually prioritize.

“Not In URL Data” displays URLs accessed by AI bots but not found in your crawl: typically orphaned pages, old redirects, or URLs discovered through external backlinks.

This comparison often reveals surprising insights about AI bot behavior. AI platforms frequently access historical content, archived pages, and deep-linked resources that might not appear in standard site crawls but remain valuable for training or reference purposes.
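The two filters amount to set operations on URLs. A minimal sketch, assuming the crawl export is a CSV with the page URL in an `Address` column (check the header of your own export) and that log URLs are paths; both sides are reduced to their path so that absolute crawl URLs and relative log paths compare equal. The tool’s own matching may differ, for example in how it treats query strings.

```python
import csv
import io
from urllib.parse import urlsplit


def normalize(url: str) -> str:
    """Reduce a full URL or a log path to its path, dropping host and query."""
    return urlsplit(url).path or "/"


def compare_with_crawl(crawl_csv, log_urls, column="Address"):
    """Split AI bot URLs into those found in the crawl and those that were not."""
    crawled = {normalize(row[column]) for row in csv.DictReader(crawl_csv)}
    logged = {normalize(url) for url in log_urls}
    return {
        "matched_with_url_data": logged & crawled,
        "not_in_url_data": logged - crawled,
    }


crawl_export = io.StringIO(
    "Address,Status Code\n"
    "https://example.com/,200\n"
    "https://example.com/blog/a,200\n"
    "https://example.com/products/x,200\n"
)
result = compare_with_crawl(crawl_export, ["/", "/blog/a", "/blog/a?utm=1", "/old-page"])
print(result)
```

With a real export, pass an open file object instead of the `StringIO` sample, for example `open(path, newline="", encoding="utf-8-sig")`, which also strips a byte-order mark if one is present.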

Practical Optimization Strategies Based on AI Bot Data

Content Strategy Optimization

AI bot crawling patterns reveal content preferences you can leverage for optimization. Pages receiving frequent AI bot attention often share characteristics:

- Comprehensive, authoritative content covering topics thoroughly

- Well-structured information with clear headings and logical flow

- Recent publication dates or regular content updates

- High-quality external links and references

Technical Performance Monitoring

Use response time data to identify pages where AI bot requests cause server strain. AI bots often crawl more aggressively than human users, potentially impacting site performance during peak activity periods.
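Response times only appear in your logs if the log format records them; the default combined format does not, so you may need to add Apache’s `%D` (microseconds) or Nginx’s `$request_time` (seconds) to the format. Once you have them, here is a minimal sketch for flagging slow URLs, assuming AI bot requests have been reduced to `(url, response_ms)` pairs and using a hypothetical 1,000 ms threshold.

```python
from collections import defaultdict
from statistics import median


def slow_urls(timings, threshold_ms=1000.0):
    """Return {url: median_ms} for URLs whose median AI bot response time exceeds
    threshold_ms, slowest first. `timings` is an iterable of (url, response_ms)."""
    by_url = defaultdict(list)
    for url, ms in timings:
        by_url[url].append(ms)
    medians = {url: median(values) for url, values in by_url.items()}
    slow = {url: m for url, m in medians.items() if m > threshold_ms}
    return dict(sorted(slow.items(), key=lambda item: item[1], reverse=True))


timings = [("/a", 200), ("/a", 300), ("/b", 1500), ("/b", 1700), ("/b", 100), ("/c", 2500)]
print(slow_urls(timings))
```

The median is used rather than the mean so that a single slow outlier does not flag an otherwise fast URL; for URLs with very few requests, treat the result as a lead to investigate rather than a conclusion.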

Bandwidth and Resource Management

Track bytes transferred to different AI platforms to understand resource consumption patterns. Some organizations implement selective access policies based on AI bot behavior and resource usage patterns revealed through log analysis.
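A common way to express such a policy is robots.txt, which compliant crawlers such as GPTBot read before fetching. Before deploying a rule, you can check that it does what you intend with Python’s standard-library `urllib.robotparser`. The policy below, which blocks GPTBot from a hypothetical `/members/` section, is an illustration only. Keep in mind that robots.txt is advisory and does not stop crawlers that ignore it, and that Python’s parser implements basic prefix matching, so it may not interpret wildcard rules the way a given crawler does.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical policy: keep GPTBot out of /members/, allow everything else.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /members/

User-agent: *
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

checks = [
    ("GPTBot", "https://example.com/members/report"),
    ("GPTBot", "https://example.com/blog/post"),
    ("ClaudeBot", "https://example.com/members/report"),
]
for agent, url in checks:
    verdict = "allowed" if parser.can_fetch(agent, url) else "blocked"
    print(f"{agent} -> {url}: {verdict}")
```

Re-running your log analysis after a policy change is the practical check: a compliant crawler’s requests to the blocked section should drop off once it has re-read robots.txt.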

The Strategic Advantage of AI Bot Monitoring

Organizations implementing systematic AI bot monitoring gain significant competitive advantages in the evolving search landscape. Understanding which content AI platforms prioritize enables strategic content optimization for generative search results and AI-powered citations.

The fundamental shift is this: Traditional SEO focused on optimizing for search engine crawlers, but the future requires optimizing for AI platforms that directly influence how your content appears in conversational search results and AI-generated summaries.

At Expert SEO Consulting, we’ve seen clients achieve measurable improvements in AI citation frequency and generative search visibility through strategic log file analysis and AI bot optimization.

Ready to gain complete visibility into how AI platforms interact with your content? Our AI Content Audit services include comprehensive log file analysis and AI bot monitoring recommendations tailored to your specific industry and content strategy.

Book a consultation to discover how AI bot optimization can enhance your search visibility in the age of generative AI.